Kubernetes Cluster with Harbor

Resource inventory

| Hostname | IP | hosts entries | Host spec | OS version |
| --- | --- | --- | --- | --- |
| kubernetes-master | 10.10.10.6 | kubernetes-master, kubernetes-master.com | 2 CPU, 2 GB RAM | CentOS 7.9 |
| kubernetes-node1 | 10.10.10.7 | kubernetes-node1, kubernetes-node1.com | 2 CPU, 2 GB RAM | CentOS 7.9 |
| kubernetes-node2 | 10.10.10.8 | kubernetes-node2, kubernetes-node2.com | 2 CPU, 2 GB RAM | CentOS 7.9 |
| kubernetes-harbor | 10.10.10.9 | kubernetes-harbor, kubernetes-harbor.com | 2 CPU, 4 GB RAM | CentOS 7.9 |

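Host environment configuration

1. Configure passwordless SSH login

Generate a key pair:

    [root@localhost ~]# ssh-keygen

Copy the public key to the other servers:

    [root@localhost ~]# for i in 7 8 9
    > do
    > ssh-copy-id root@10.10.10.$i
    > done

Answer yes at the host-key prompt and enter the password for each server.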
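The same "loop over the last IP octet" pattern recurs throughout this guide. A minimal sketch of a reusable helper follows; the names `SUBNET`, `DRY_RUN`, and `run_on_hosts` are illustrative assumptions, not part of the original setup. By default it only prints the `ssh` commands, so it can be checked before anything runs remotely.

```shell
# Hypothetical helper: run one command on several hosts in the 10.10.10.x subnet.
SUBNET="10.10.10"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the ssh commands, anything else = run them

run_on_hosts() {
  local cmd="$1" octet
  shift
  for octet in "$@"; do
    if [ "$DRY_RUN" = "1" ]; then
      # Show what would be executed on each host.
      echo "ssh root@${SUBNET}.${octet} \"${cmd}\""
    else
      ssh "root@${SUBNET}.${octet}" "$cmd"
    fi
  done
}

# Dry run against the three other servers from the inventory.
run_on_hosts "hostname" 7 8 9
```

Setting `DRY_RUN=0` switches the helper from printing to executing, which relies on the passwordless login configured in this step.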

2. Configure the firewall and swap

Disable the firewall on the local host:

    [root@localhost ~]# systemctl disable --now firewalld

Disable the firewall on all other servers:

    [root@localhost ~]# for i in 7 8 9
    > do
    > ssh root@10.10.10.$i "systemctl disable firewalld && systemctl stop firewalld"
    > done

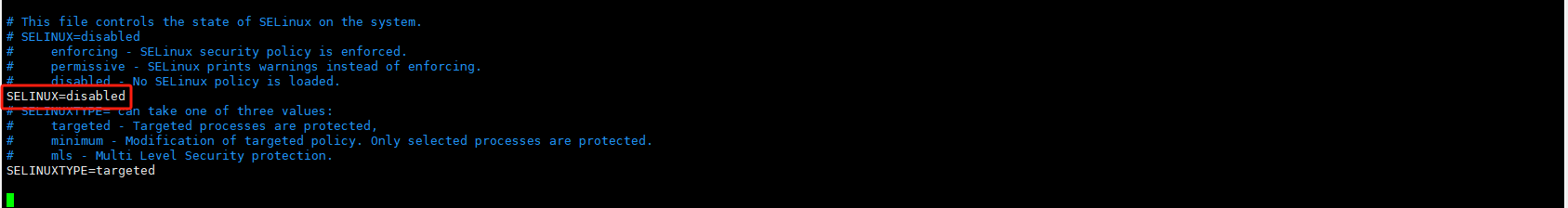

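Disable SELinux on all servers:

    [root@localhost ~]# setenforce 0

    [root@localhost ~]# for i in 7 8 9
    > do
    > ssh root@10.10.10.$i "setenforce 0"
    > done

`setenforce 0` only lasts until the next reboot, so `/etc/selinux/config` must also be edited by hand.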
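The manual edit can also be scripted. The sketch below assumes the intended change is `SELINUX=disabled` (the text does not say which value was used), and `set_selinux_mode` is a hypothetical helper name. The demo works on a scratch file rather than on `/etc/selinux/config`.

```shell
# Hypothetical helper: set the SELINUX= line in an SELinux config file.
set_selinux_mode() {
  local file="$1" mode="$2" tmp
  tmp="$(mktemp)" || return 1
  # Rewrite only the line that starts with "SELINUX="; SELINUXTYPE= is untouched.
  if sed "s/^SELINUX=.*/SELINUX=${mode}/" "$file" > "$tmp"; then
    # Overwrite in place so the file keeps its owner and permissions.
    cat "$tmp" > "$file"
  fi
  rm -f "$tmp"
}

# Demo on a scratch copy, so nothing under /etc is modified.
demo="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
set_selinux_mode "$demo" disabled   # assumption: "disabled" is the target mode
cat "$demo"
rm -f "$demo"
```

On a real host the call would be `set_selinux_mode /etc/selinux/config disabled`, and the new mode takes effect at the next boot.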

Then copy the file to the other servers:

    [root@localhost ~]# for i in 7 8 9
    > do
    > scp /etc/selinux/config root@10.10.10.$i:/etc/selinux/config
    > done

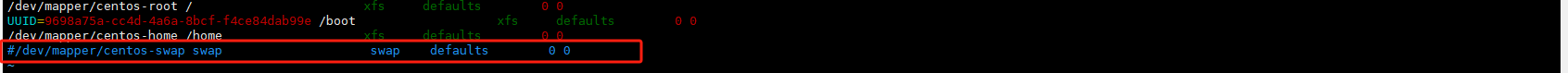

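Disable swap:

    [root@localhost ~]# swapoff -a    # temporary, until the next reboot
    ## To disable swap permanently, comment out the swap entry in /etc/fstab:
    #/dev/mapper/centos-swap swap swap defaults 0 0

Disable swap on the worker nodes as well. Harbor can keep swap enabled, because it does not join the cluster and only serves as an image registry.

    [root@kubernetes-master ~]# for i in 7 8
    > do
    > ssh root@10.10.10.$i "swapoff -a"
    > done

Then comment out the swap entry in `/etc/fstab` on each node.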
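Commenting out the swap entry can be done with `sed` instead of an editor. The following is a sketch only; `comment_out_swap` is a hypothetical helper, and the demo runs against a scratch file with two sample fstab lines rather than the real `/etc/fstab`.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  local file="$1" tmp
  tmp="$(mktemp)" || return 1
  # Match lines that are not already comments and have "swap" as a whole field,
  # then prefix them with "#". Running it twice changes nothing further.
  if sed -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file" > "$tmp"; then
    cat "$tmp" > "$file"
  fi
  rm -f "$tmp"
}

# Demo on a scratch file with sample entries.
demo="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"
rm -f "$demo"
```

Backing up `/etc/fstab` before running this on a real node is prudent, since a malformed fstab can prevent the system from booting.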

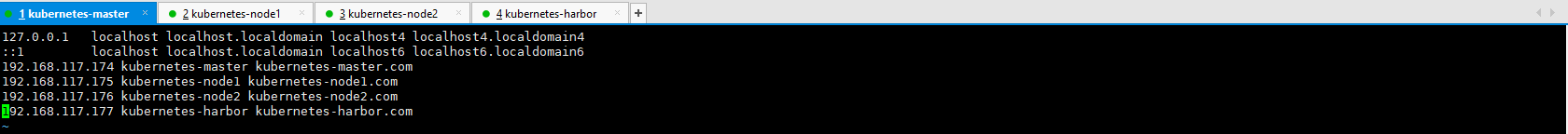

3. Configure local name resolution

    [root@localhost ~]# vim /etc/hosts

    10.10.10.6 kubernetes-master kubernetes-master.com
    10.10.10.7 kubernetes-node1 kubernetes-node1.com
    10.10.10.8 kubernetes-node2 kubernetes-node2.com
    10.10.10.9 kubernetes-harbor kubernetes-harbor.com
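Because every entry follows the same `IP name name.com` shape, the block can be generated rather than typed. This sketch uses a hypothetical `gen_hosts` function and hard-codes the four hosts from the inventory; it only prints the lines and does not touch `/etc/hosts`.

```shell
# Hypothetical generator for the /etc/hosts entries used in this guide.
gen_hosts() {
  local entry ip name
  # Each item is "<last octet>:<hostname>", taken from the resource inventory.
  for entry in "6:kubernetes-master" "7:kubernetes-node1" "8:kubernetes-node2" "9:kubernetes-harbor"; do
    ip="10.10.10.${entry%%:*}"
    name="${entry#*:}"
    printf '%s %s %s.com\n' "$ip" "$name" "$name"
  done
}

gen_hosts
```

Appending the result with `gen_hosts >> /etc/hosts` would add the entries on a host that does not have them yet.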

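Copy the file to the node servers, running the following for each server's final IP octet `$i`:

    [root@localhost ~]# scp /etc/hosts root@10.10.10.$i:/etc/hosts

4. Set the hostnames

Run the matching command on each host:

    hostnamectl set-hostname kubernetes-master    # on 10.10.10.6
    hostnamectl set-hostname kubernetes-node1     # on 10.10.10.7
    hostnamectl set-hostname kubernetes-node2     # on 10.10.10.8
    hostnamectl set-hostname kubernetes-harbor    # on 10.10.10.9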

5. Enable IP forwarding

    cat > /etc/sysctl.d/k8s.conf <<EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    vm.swappiness = 0
    EOF

Copy the file to the other nodes, running the following for each node's final IP octet `$i`:

    [root@kubernetes-master ~]# scp /etc/sysctl.d/k8s.conf root@10.10.10.$i:/etc/sysctl.d/k8s.conf

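On every node except the Harbor server, load the `br_netfilter` kernel module and apply the sysctl settings. The module listing and the applied values should look like this:

    br_netfilter           22256  0
    bridge                151336  1 br_netfilter
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    vm.swappiness = 0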
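The module is normally loaded with `modprobe br_netfilter` and the settings applied with `sysctl --system`; treat that pairing as an assumption about what was run here. Before applying, the config file itself can be sanity-checked. The sketch below uses a hypothetical `check_k8s_sysctl` function and runs against a scratch copy of the settings.

```shell
# Hypothetical check: confirm the three forwarding-related keys are set to 1.
check_k8s_sysctl() {
  local file="$1" key pattern
  for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward; do
    # Escape the dots so they match literally in the regular expression.
    pattern="$(printf '%s' "$key" | sed 's/\./\\./g')"
    if ! grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$file"; then
      echo "not set to 1: ${key}"
      return 1
    fi
  done
  echo "ok"
}

# Demo on a scratch copy of the settings from this step.
demo="$(mktemp)"
printf '%s\n' \
  'net.bridge.bridge-nf-call-ip6tables = 1' \
  'net.bridge.bridge-nf-call-iptables = 1' \
  'net.ipv4.ip_forward = 1' \
  'vm.swappiness = 0' > "$demo"
check_k8s_sysctl "$demo"
rm -f "$demo"
```

On a node, `check_k8s_sysctl /etc/sysctl.d/k8s.conf` validates the copied file before it is applied.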

6. Install Docker

    [root@kubernetes-master ~]# curl https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker.repo
    [root@kubernetes-master ~]# yum -y install docker-ce

Configure Docker registry mirrors and the cgroup driver:

    [root@kubernetes-master ~]# cat > /etc/docker/daemon.json <<EOF
    {
      "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn", "https://docker.m.daocloud.io", "http://hub-mirrors.c.163.com"],
      "insecure-registries": ["kubernetes-harbor.com"],
      "data-root": "/var/lib/docker",
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF

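Notes:

`"insecure-registries": ["kubernetes-harbor.com"]` is the address of the self-hosted Harbor registry.

`"data-root": "/var/lib/docker"` is Docker's data directory.

`"exec-opts": ["native.cgroupdriver=systemd"]` sets Docker's cgroup driver to systemd, so that it matches the systemd driver that kubelet uses.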

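Copy the file to the other nodes, running the following for each node's final IP octet `$i`:

    [root@kubernetes-master ~]# scp /etc/docker/daemon.json root@10.10.10.$i:/etc/docker/daemon.json

Start Docker on all nodes and enable it at boot:

    [root@kubernetes-master ~]# systemctl daemon-reload && systemctl start docker && systemctl enable docker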

7. Install cri-docker

Install `cri-dockerd` on the master; the build used here reports version `0.3.4 (e88b1605)`. Then copy the binary to `/usr/bin` on the other nodes (destination `root@10.10.10.$i:/usr/bin`).

Custom configuration

Create `/etc/systemd/system/cri-docker.service` with the following content:

    [Unit]
    Description=CRI Interface for Docker Application Container Engine
    Documentation=https://docs.mirantis.com
    After=network-online.target firewalld.service docker.service
    Wants=network-online.target

    [Service]
    Type=notify
    ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --cri-dockerd-root-directory=/var/lib/dockershim --docker-endpoint=unix:///var/run/docker.sock --cri-dockerd-root-directory=/var/lib/docker
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always
    StartLimitBurst=3
    StartLimitInterval=60s
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TasksMax=infinity
    Delegate=yes
    KillMode=process

    [Install]
    WantedBy=multi-user.target

    [root@kubernetes-master ~]# cat > /etc/systemd/system/cri-docker.socket << EOF
    [Unit]
    Description=CRI Docker Socket for the API
    PartOf=cri-docker.service

    [Socket]
    ListenStream=/var/run/cri-dockerd.sock
    SocketMode=0660
    SocketUser=root
    SocketGroup=docker

    [Install]
    WantedBy=sockets.target
    EOF

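Copy both unit files to the other nodes, running the following for each node's final IP octet `$i`:

    [root@kubernetes-master ~]# scp /etc/systemd/system/cri-docker.service /etc/systemd/system/cri-docker.socket root@10.10.10.$i:/etc/systemd/system/

Start cri-docker, enable it at boot, and check its status:

    [root@kubernetes-master ~]# systemctl daemon-reload && systemctl start cri-docker && systemctl enable cri-docker
    [root@kubernetes-master ~]# systemctl status cri-docker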

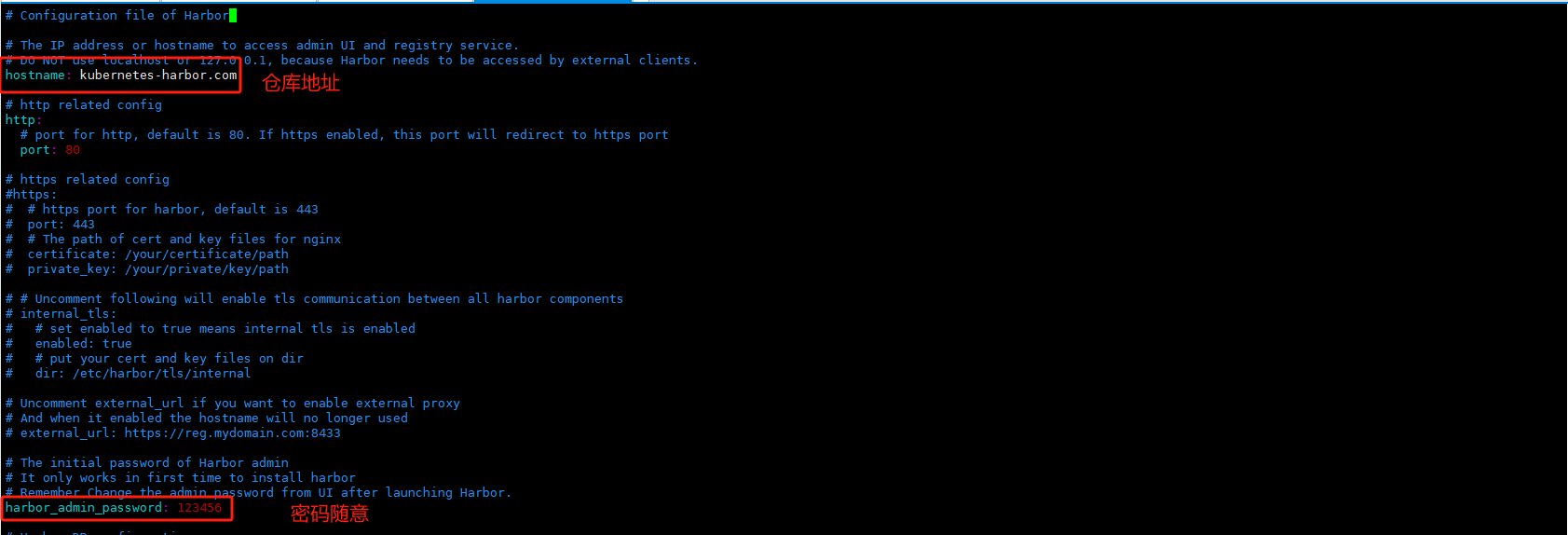

8. Prepare the Harbor registry

    ## Install docker-compose
    [root@kubernetes-harbor ~]# yum -y install epel-release
    [root@kubernetes-harbor ~]# yum -y install docker-compose

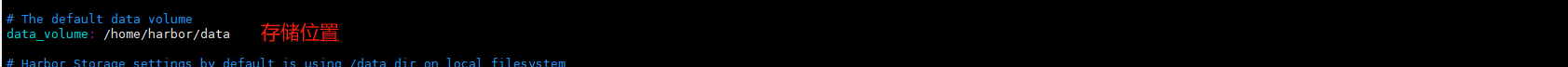

    [root@kubernetes-harbor ~]# mkdir /home/harbor/data -p && cd /home/harbor
    [root@kubernetes-harbor harbor]# wget https://github.com/goharbor/harbor/releases/download/v2.7.3/harbor-offline-installer-v2.7.3.tgz
    [root@kubernetes-harbor harbor]# tar xvf harbor-offline-installer-v2.7.3.tgz
    [root@kubernetes-harbor harbor]# cd harbor
    [root@kubernetes-harbor harbor]# cp harbor.yml.tmpl harbor.yml
    [root@kubernetes-harbor harbor]# vim harbor.yml

    ## Install Harbor
    123456

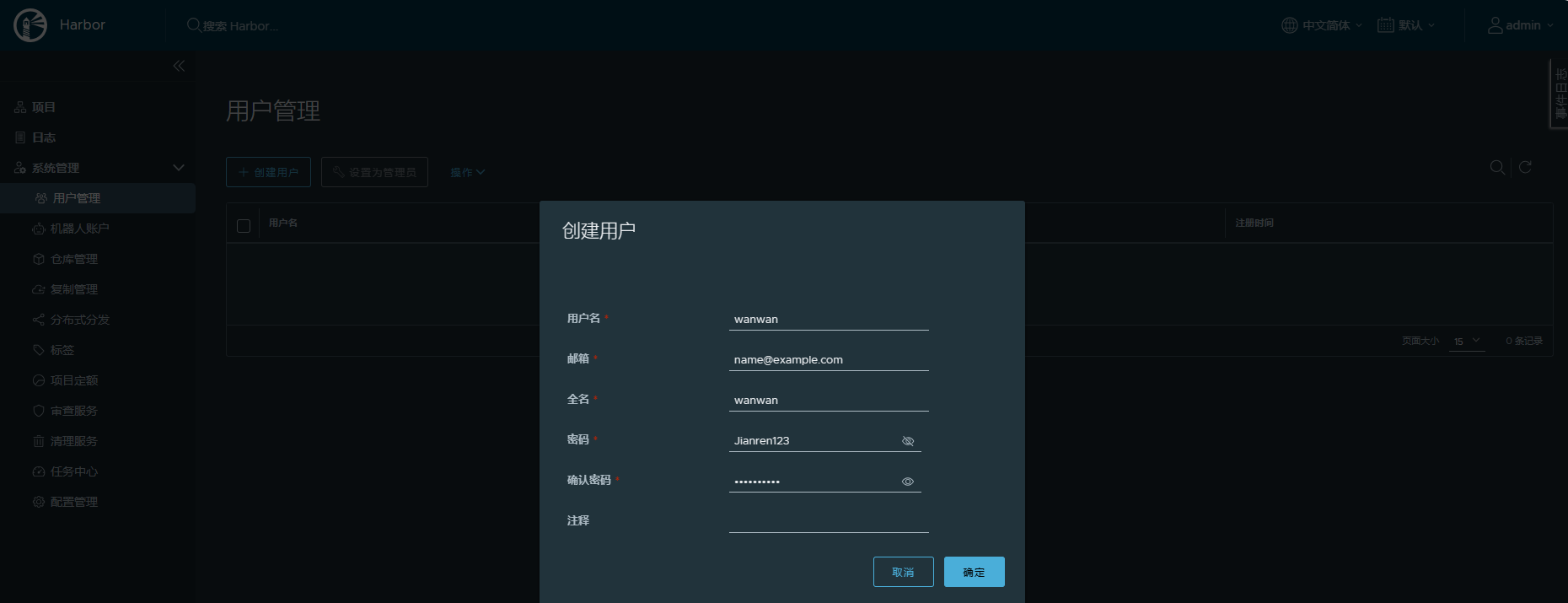

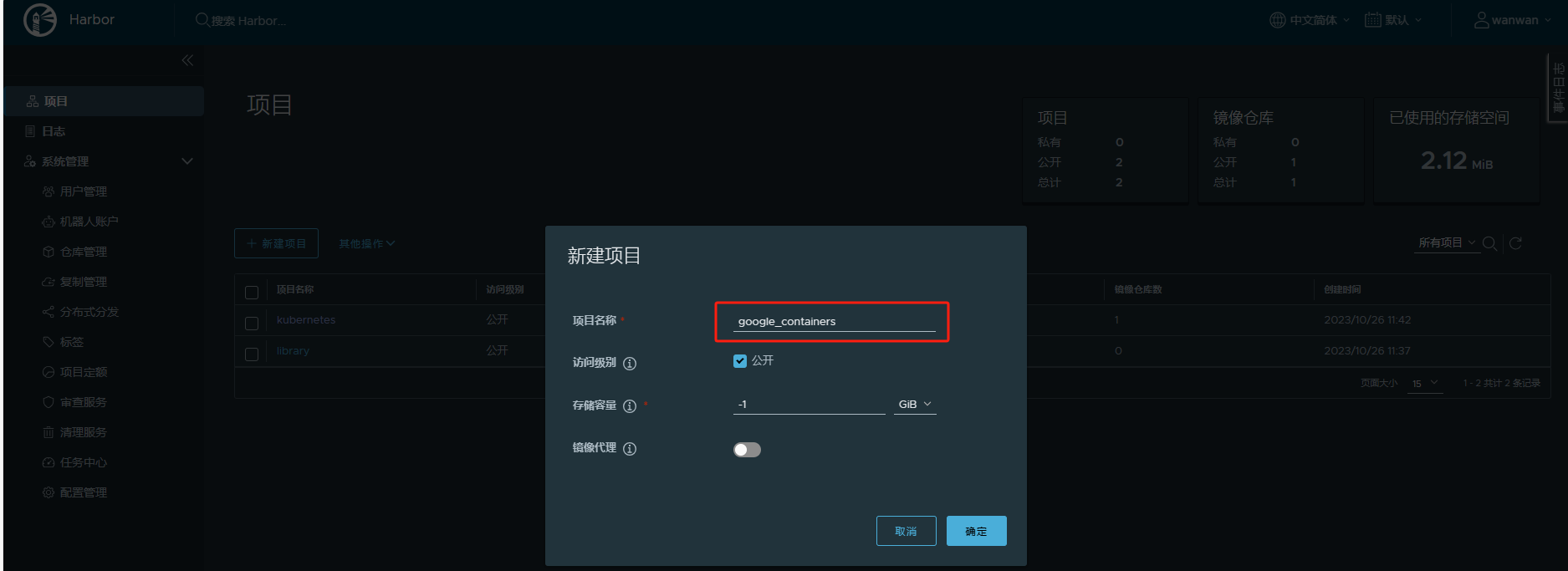

Create a new user and log in:

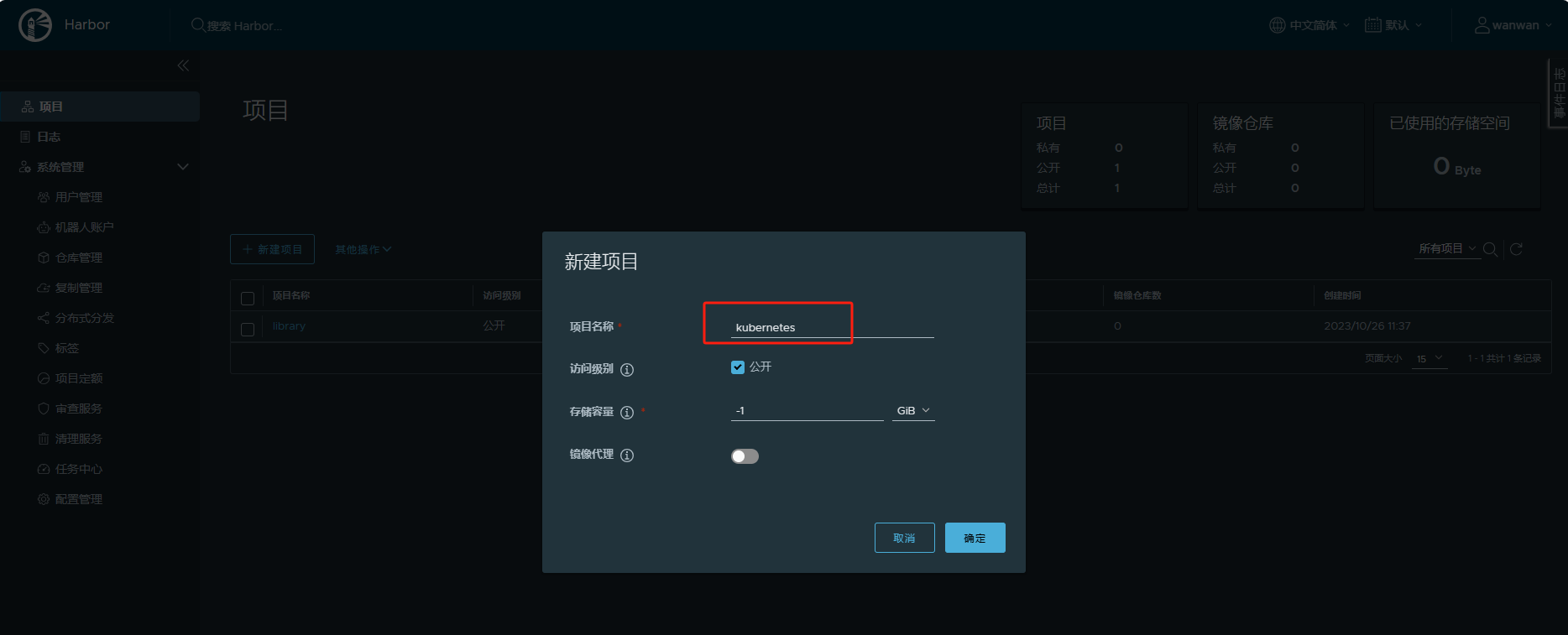

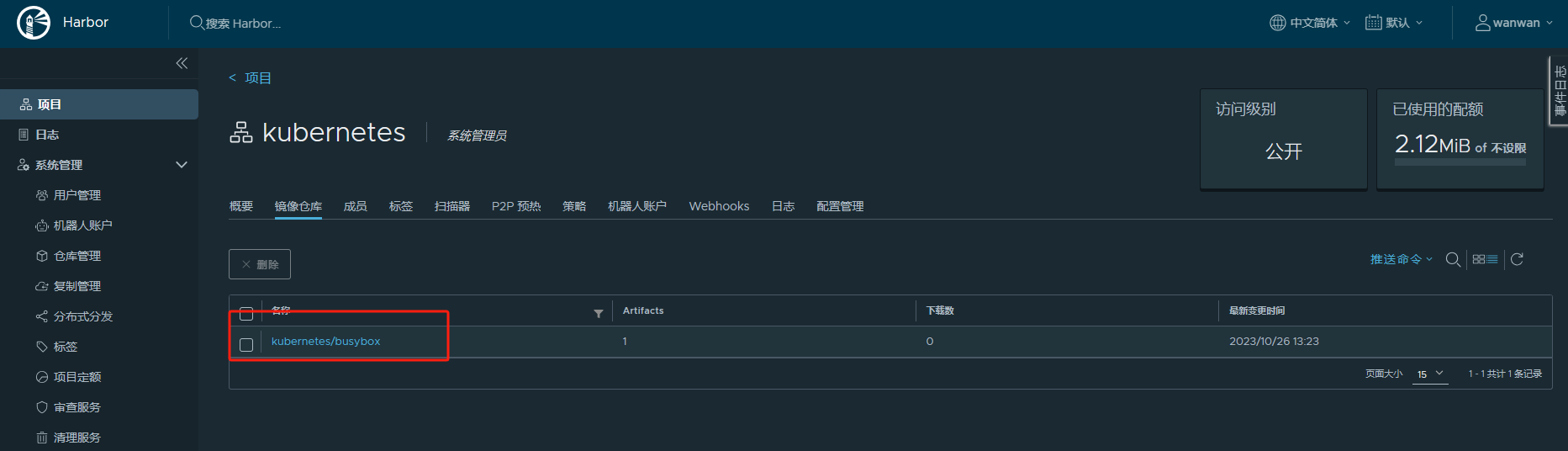

Create a new project to hold the images that Kubernetes needs:

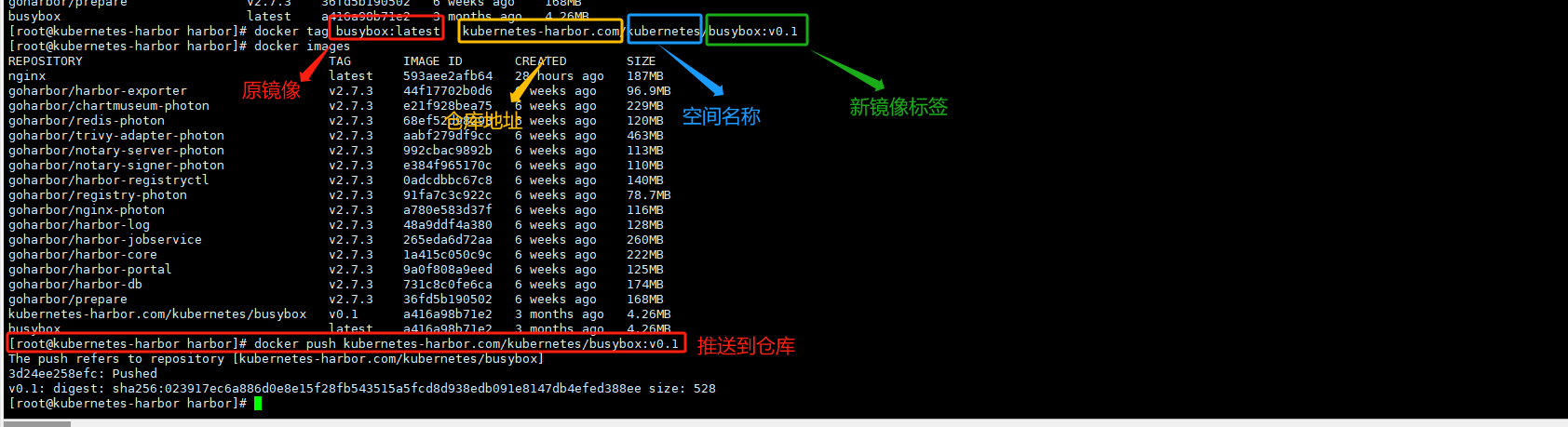

Test the Harbor registry:

    ## Log in to the registry
    [root@kubernetes-harbor harbor]# docker login kubernetes-harbor.com -u wanwan -p Jianren123
    ## Pull an image
    [root@kubernetes-harbor harbor]# docker pull busybox
    ## Retag it for the registry
    [root@kubernetes-harbor harbor]# docker tag busybox:latest kubernetes-harbor.com/kubernetes/busybox:v0.1
    ## Push the image to the registry
    [root@kubernetes-harbor harbor]# docker push kubernetes-harbor.com/kubernetes/busybox:v0.1
    ## The pushed image is now visible in the Harbor web UI
    # Pull test
    [root@kubernetes-node1 ~]# docker pull kubernetes-harbor.com/kubernetes/busybox:v0.1
    [root@kubernetes-node2 ~]# docker pull kubernetes-harbor.com/kubernetes/busybox:v0.1

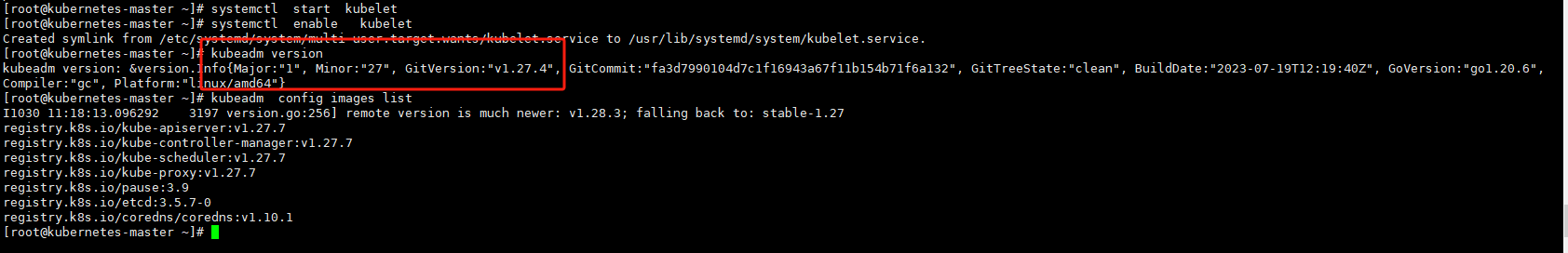

Cluster initialization

1. Install the software

    [root@kubernetes-master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    [root@kubernetes-master ~]# yum makecache fast
    [root@kubernetes-master ~]# yum -y install kubelet-1.27.4-0 kubeadm-1.27.4-0 kubectl-1.27.4-0 --disableexcludes=kubernetes
    [root@kubernetes-master ~]# systemctl start kubelet
    [root@kubernetes-master ~]# systemctl enable kubelet

Check the installed version and list the images that kubeadm requires:

    [root@kubernetes-master ~]# kubeadm version
    [root@kubernetes-master ~]# kubeadm config images list

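2. Prepare the images

The script for this step lists the images that kubeadm needs for version 1.27.4 and keeps only the last path component of each name with `awk -F '/' '{print $NF}'`. Then, for each image `$i` in `${images}`, it pulls the image from a mirror's `/google_containers/` namespace, tags it as `kubernetes-harbor.com/google_containers/$i`, and pushes it to Harbor.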
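The renaming step is the part most easily gotten wrong, so here is a small sketch of it in isolation. `harbor_ref` is a hypothetical helper, and the two source image names are sample inputs standing in for real `kubeadm config images list` output. The loop only prints the `docker tag` commands; it does not call Docker.

```shell
# Target repository in Harbor, as used by the kubeadm init step.
HARBOR_REPO="kubernetes-harbor.com/google_containers"

# Hypothetical helper: map any source image reference to its Harbor name
# by keeping only the last path component (name:tag).
harbor_ref() {
  local name
  name="$(printf '%s\n' "$1" | awk -F '/' '{print $NF}')"
  printf '%s/%s\n' "$HARBOR_REPO" "$name"
}

# Sample inputs (assumed, for illustration only).
for src in registry.k8s.io/kube-apiserver:v1.27.4 registry.k8s.io/coredns/coredns:v1.10.1; do
  echo "docker tag ${src} $(harbor_ref "$src")"
done
```

Taking only the last component flattens nested names such as `coredns/coredns`, which is what lets a single Harbor project hold every image.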

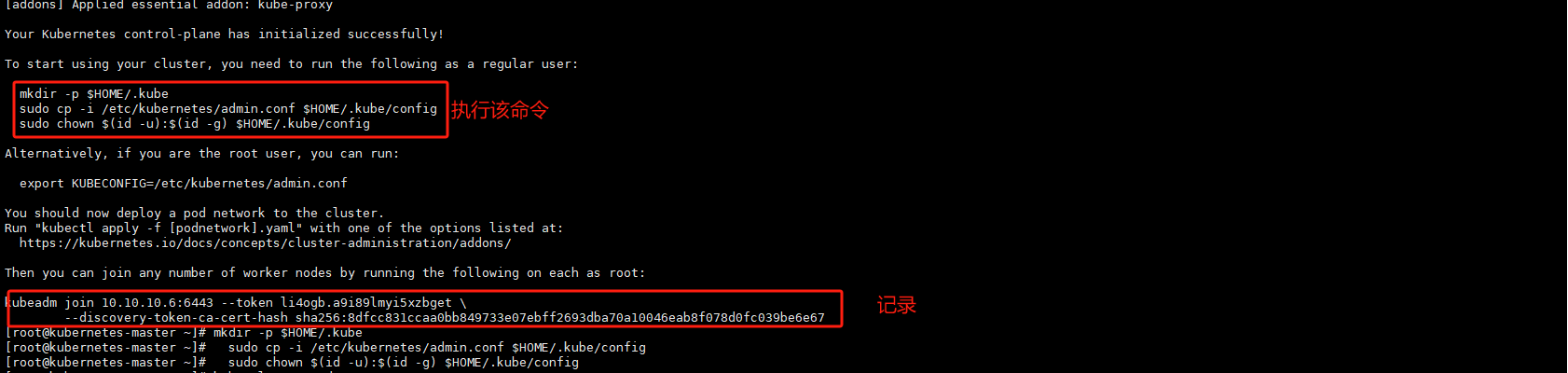

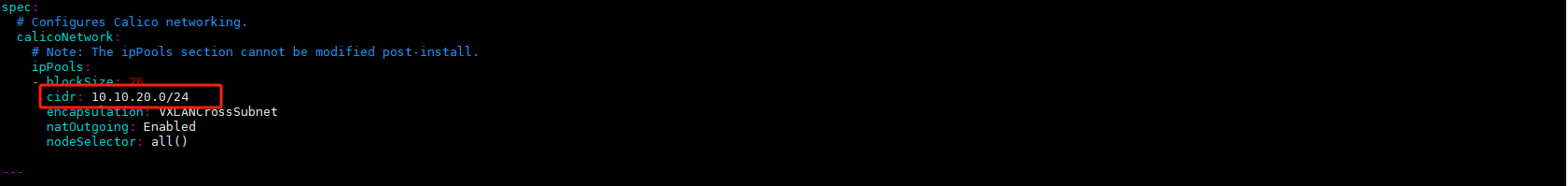

3. Initialize with kubeadm

    [root@kubernetes-master ~]# kubeadm init --kubernetes-version=1.27.4 \
    --image-repository=kubernetes-harbor.com/google_containers \
    --pod-network-cidr=10.10.20.0/24 \
    --cri-socket=unix:///var/run/cri-dockerd.sock

Set up the kubeconfig for kubectl:

    [root@kubernetes-master ~]# mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

4. Join the cluster

Run the join command on each worker node:

    kubeadm join 10.10.10.6:6443 \
    --token li4ogb.a9i89lmyi5xzbget \
    --discovery-token-ca-cert-hash sha256:8dfcc831ccaa0bb849733e07ebff2693dba70a10046eab8f078d0fc039be6e67 \
    --cri-socket unix:///var/run/cri-dockerd.sock
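Bootstrap tokens expire (24 hours by default), so the join command may need to be regenerated later with `kubeadm token create --print-join-command` on the master. That output typically omits the `--cri-socket` flag, which this setup needs because both Docker and cri-dockerd are present. The sketch below appends it; `with_cri_socket` is a hypothetical helper, and the sample input uses placeholder token and hash values.

```shell
# CRI socket used throughout this guide.
CRI_SOCKET="unix:///var/run/cri-dockerd.sock"

# Hypothetical helper: append the --cri-socket flag to a join command string.
with_cri_socket() {
  printf '%s --cri-socket %s\n' "$1" "$CRI_SOCKET"
}

# Sample input with placeholders, standing in for real kubeadm output.
with_cri_socket "kubeadm join 10.10.10.6:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>"
```

On the master, `with_cri_socket "$(kubeadm token create --print-join-command)"` would produce a ready-to-paste command for the worker nodes.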

5. Check the cluster status

    [root@kubernetes-master ~]# kubectl get nodes
    NAME                STATUS     ROLES           AGE     VERSION
    kubernetes-master   NotReady   control-plane   5m29s   v1.27.4
    kubernetes-node1    NotReady   <none>          4m6s    v1.27.4
    kubernetes-node2    NotReady   <none>          3m58s   v1.27.4

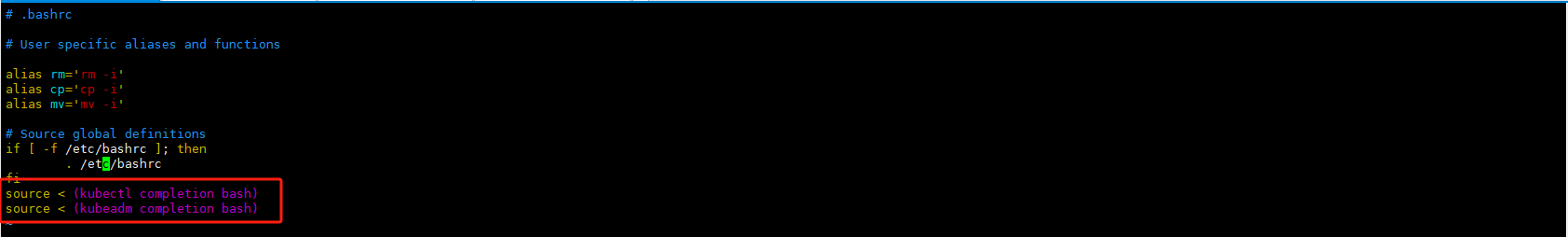

6. Finishing touches

    # Configure shell completion
    [root@kubernetes-master ~]# vim .bashrc
    [root@kubernetes-master ~]# source .bashrc
    # kubectl and kubeadm now offer completions when Tab is pressed
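The lines added to `.bashrc` are not shown in this step. A common choice, assumed here, is to source the output of `kubectl completion bash` and `kubeadm completion bash`. The sketch below adds such lines idempotently; `append_once` is a hypothetical helper, and the demo writes to a scratch file rather than the real `.bashrc`.

```shell
# Hypothetical helper: append a line to a file only if it is not already there.
append_once() {
  local file="$1" line="$2"
  # -x matches whole lines, -F treats the line as a fixed string.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo on a scratch file; on the master the target would be ~/.bashrc.
rc="$(mktemp)"
append_once "$rc" 'source <(kubectl completion bash)'
append_once "$rc" 'source <(kubeadm completion bash)'
append_once "$rc" 'source <(kubectl completion bash)'   # no-op: already present
cat "$rc"
rm -f "$rc"
```

Completion also depends on the `bash-completion` package being installed, which is worth checking if Tab does nothing after sourcing the file.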

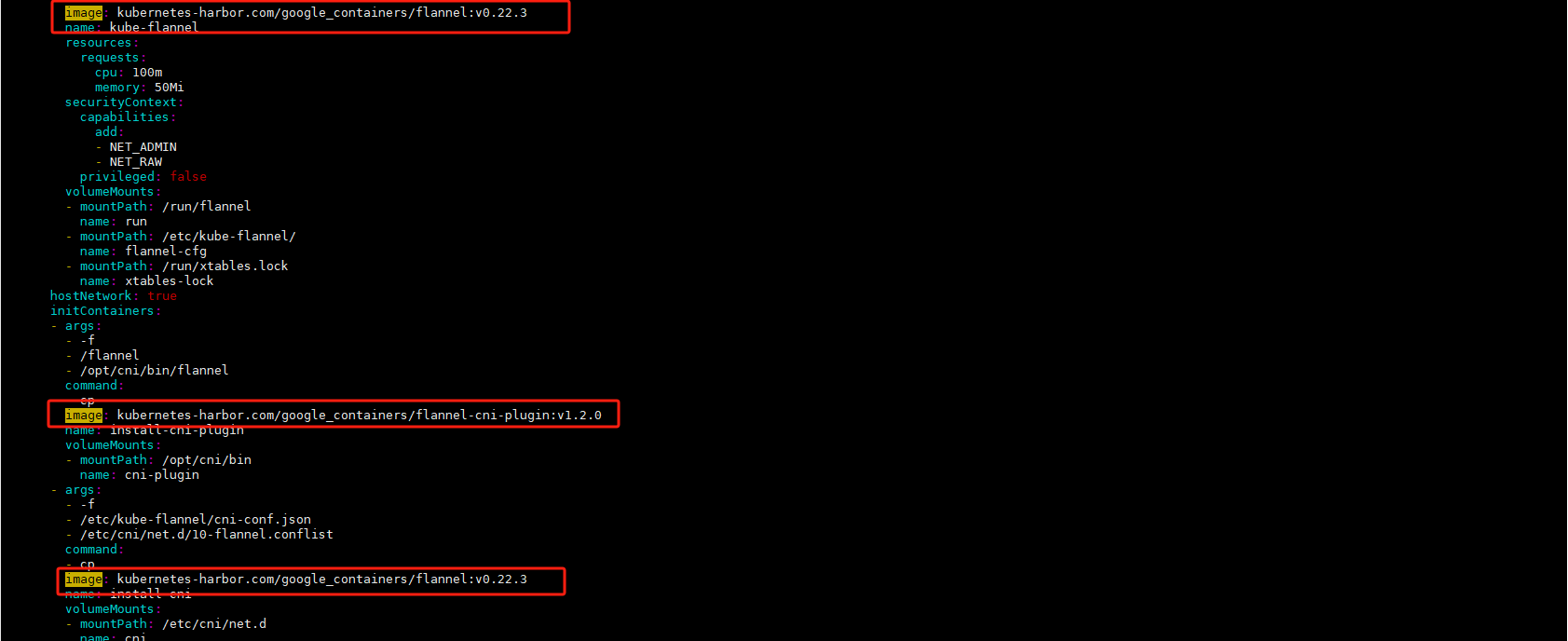

7. Configure the network plugin

Choose one of the two options; the Calico network plugin is recommended.

1. Network component: flannel

    [root@kubernetes-master ~]# mkdir /home/kubernetes/network/flannel -p && cd /home/kubernetes/network/flannel

The remaining commands of this step run from that directory and involve versions ending in `22.3`, `2.0`, and `22.3`.

Change the three image references to the Harbor registry address; the follow-up command also runs from the `flannel` directory on the master.

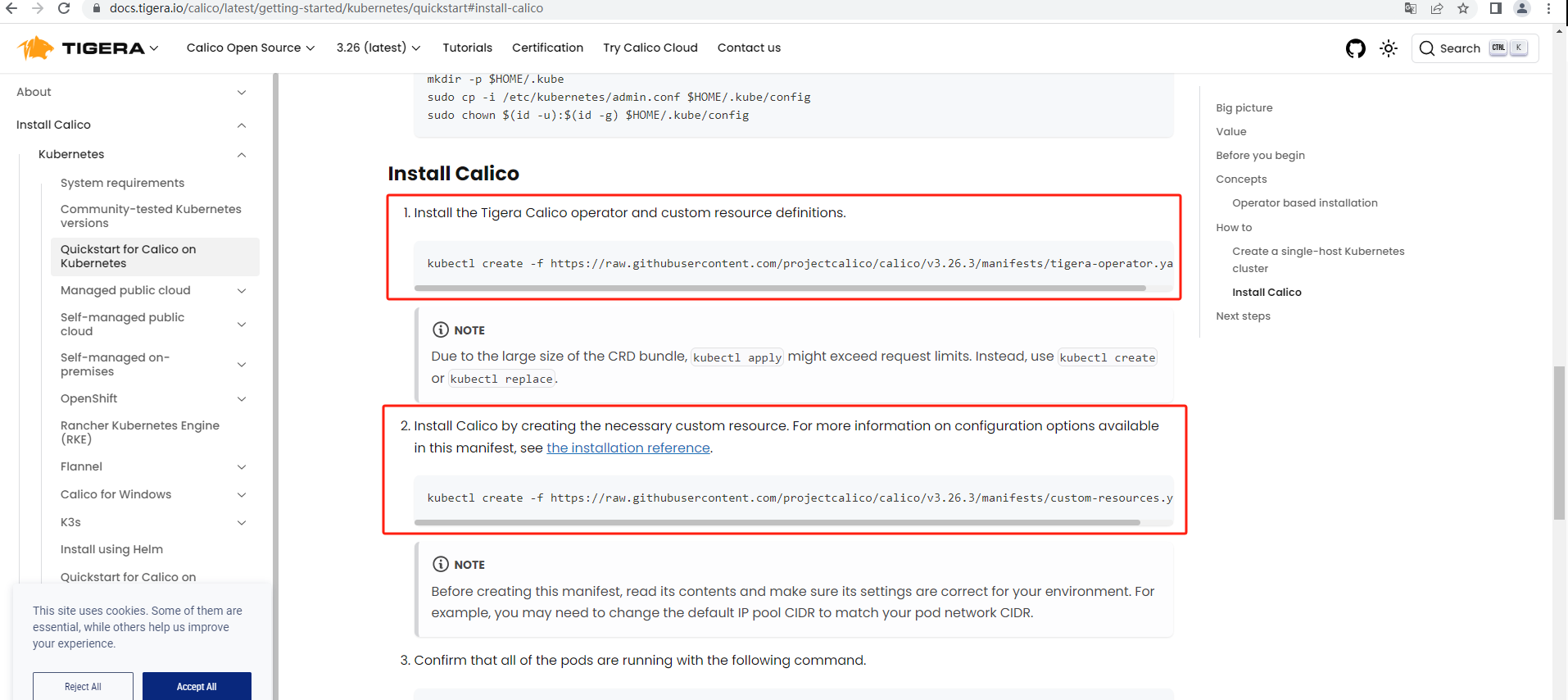

2. Network component: calico

Calico website: https://docs.tigera.io/calico/latest/getting-started/kubernetes/quickstart

The Calico installation commands run on the master node.

Verify cluster availability

    [root@kubernetes-master ~]# kubectl get node
    NAME                STATUS   ROLES           AGE   VERSION
    kubernetes-master   Ready    control-plane   92m   v1.27.4
    kubernetes-node1    Ready    <none>          90m   v1.27.4
    kubernetes-node2    Ready    <none>          89m   v1.27.4

Check cluster health (ideal state):

    [root@kubernetes-master ~]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME        STATUS    MESSAGE   ERROR
    scheduler   Healthy   ok

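Check the pods running in the Kubernetes cluster:

    [root@kubernetes-master ~]# kubectl get pod -n kube-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    coredns-7bdc4cb885-bx4cq                    1/1     Running   0          93m
    coredns-7bdc4cb885-th6ft                    1/1     Running   0          93m
    etcd-kubernetes-master                      1/1     Running   0          93m
    kube-apiserver-kubernetes-master            1/1     Running   0          93m
    kube-controller-manager-kubernetes-master   1/1     Running   0          93m
    kube-proxy-*                                1/1     Running   0          91m
    kube-proxy-*                                1/1     Running   0          90m
    kube-proxy-*                                1/1     Running   0          93m
    kube-scheduler-kubernetes-master            1/1     Running   0          93m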