Kubernetes Cluster Deployment

This guide installs Kubernetes 1.29.2 with kubeadm.

Preface

Kubernetes startup chain:

    linux > docker > cri-docker > kubelet > AS/CM/SCH
    - AS: apiserver
    - CM: controller-manager
    - SCH: scheduler

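Installing with kubeadm:

- Simple
- Self-healing
- Hides some deployment details, which makes the cluster harder to understand
- Allows more flexible cluster configuration
- Harder to understand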

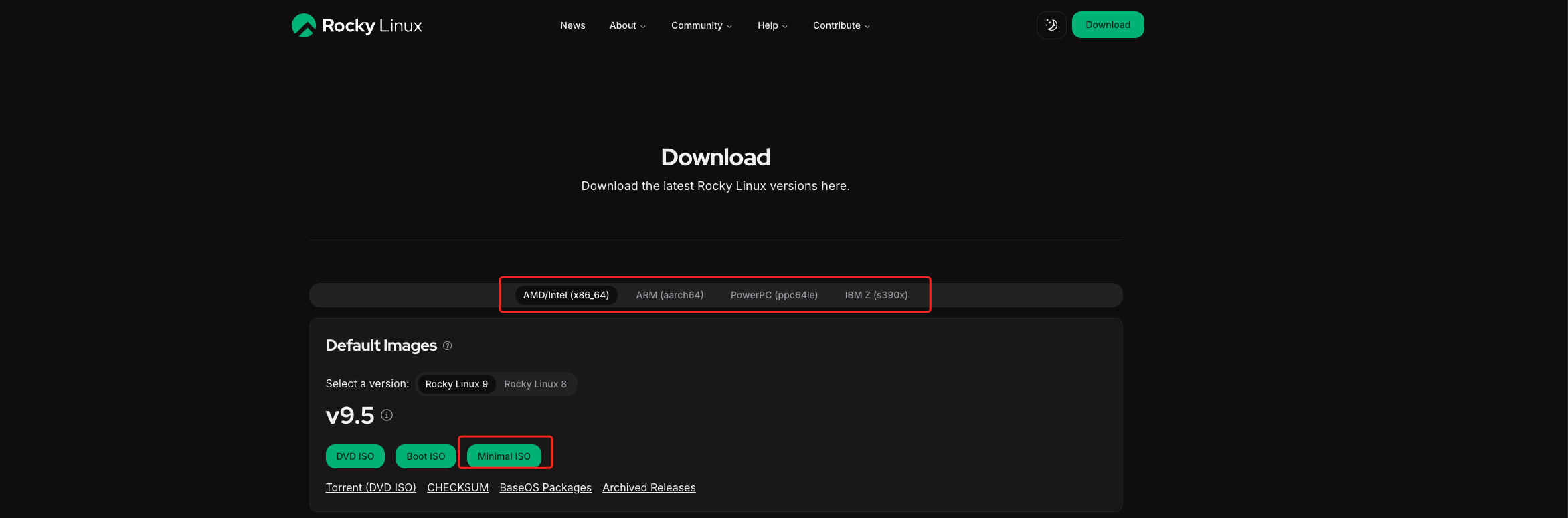

I. Preparation

Download the Rocky Linux 9 image

Official site

    https://rockylinux.org/zh-CN/download

Choose the image that matches your system architecture.

Alibaba Cloud mirror

    https://mirrors.aliyun.com/rockylinux/9/isos/x86_64/?spm=a2c6h.25603864.0.0.29696621VzJej5

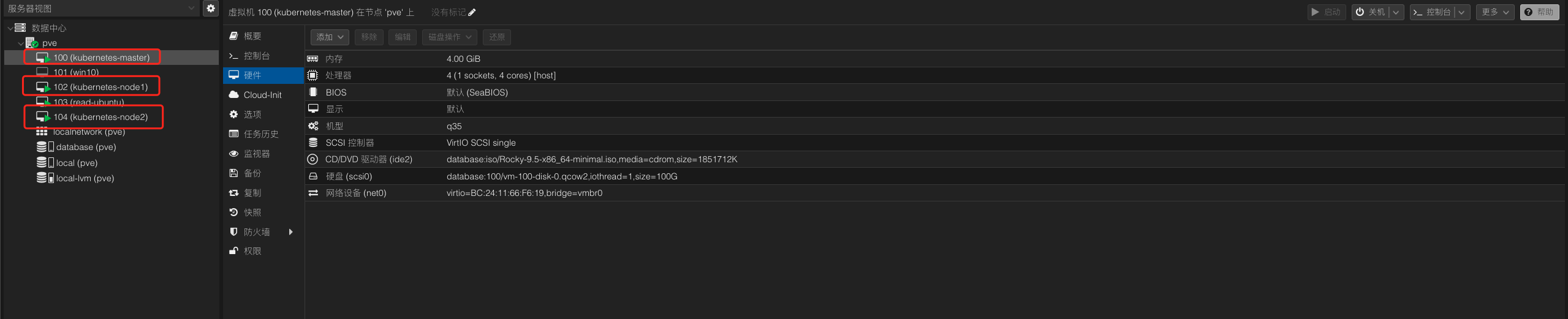

II. System configuration

CPU: 4 cores. Memory: 4 GB. Disk: 100 GB. Topology: one master and two worker nodes.

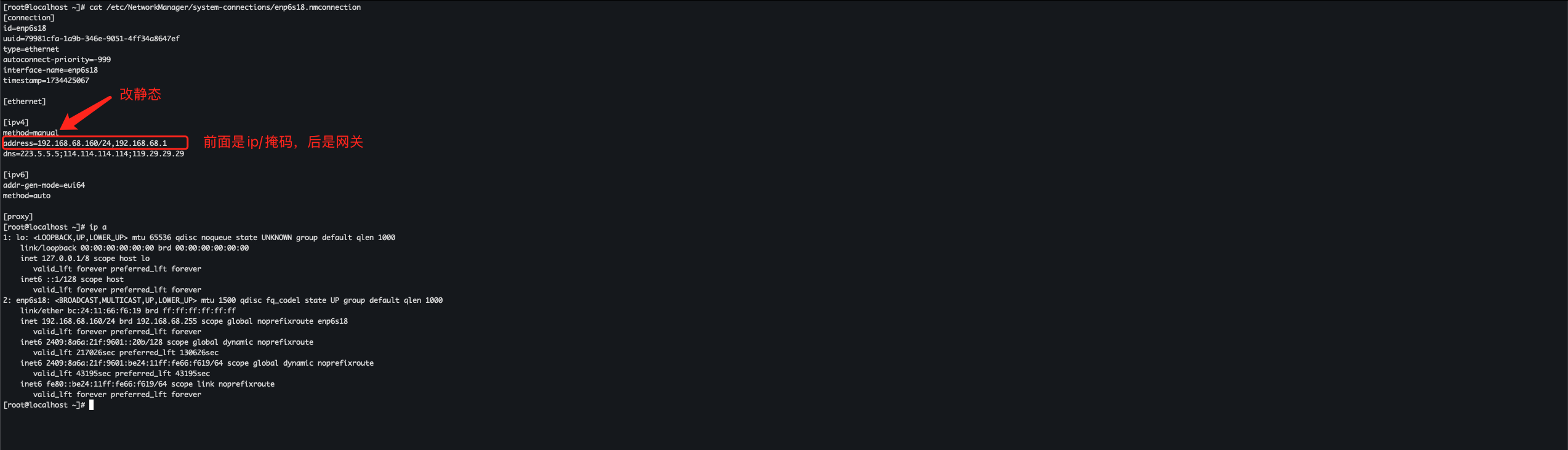

Netmask: 255.255.255.0

Gateway: 192.168.68.1

Node IP addresses:

- master: 192.168.68.160
- node1: 192.168.68.161
- node2: 192.168.68.162

III. Environment initialization

Give every machine a static IP address. Only the [ipv4] block of the connection profile needs to change:

    [ipv4]
    method=manual
    address1=192.168.68.160/24,192.168.68.1
    dns=223.5.5.5

Configure the system package mirror

    https://developer.aliyun.com/mirror/rockylinux?spm=a2c6h.13651102.0.0.47731b11u4haJL  # Alibaba Cloud mirror

    sed -e 's|^mirrorlist=|#mirrorlist=|g' \
        -e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.aliyun.com/rockylinux|g' \

Disable firewalld and enable iptables

    systemctl stop firewalld
    systemctl disable firewalld

    yum -y install iptables-services
    systemctl enable iptables
    service iptables save

Disable SELinux

    setenforce 0
    sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

Turn off the swap partition

    swapoff -a
    sed -i 's:/dev/mapper/rl-swap:#/dev/mapper/rl-swap:g' /etc/fstab
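The sed command above matches the literal device name /dev/mapper/rl-swap. As a minimal sketch for machines where the swap device is named differently, the filter below comments out any active swap entry by looking at the filesystem-type field instead. The function name comment_out_swap is hypothetical, and the sketch assumes a conventional fstab layout in which the third field is the type. It reads from stdin, so it can be tried on sample text before being pointed at /etc/fstab.

```shell
#!/bin/sh
# Hypothetical helper: comment out every active swap entry in fstab-formatted
# text read from stdin. Lines that are already comments are left untouched.
comment_out_swap() {
  awk '
    /^[[:space:]]*#/ { print; next }        # keep existing comments as they are
    $3 == "swap"     { print "#" $0; next } # third fstab field is the fs type
                     { print }
  '
}

# Demo on sample text (not the real /etc/fstab).
printf '%s\n' \
  '/dev/mapper/rl-root / xfs defaults 0 0' \
  '/dev/mapper/rl-swap none swap defaults 0 0' | comment_out_swap
```

On a real node the output would be reviewed first and only then written back, for example through a temporary file.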

Set the hostnames

    hostnamectl set-hostname k8s-master   # on the master
    hostnamectl set-hostname k8s-node01   # on node1
    hostnamectl set-hostname k8s-node02   # on node2
    bash

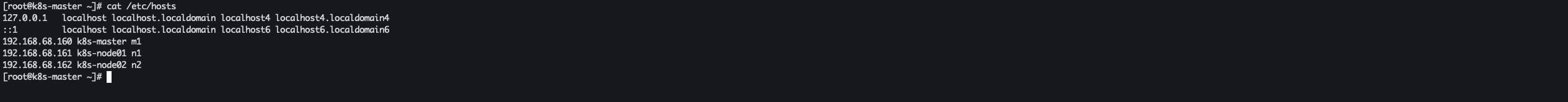

Configure local name resolution

    vi /etc/hosts
    192.168.68.160 k8s-master m1
    192.168.68.161 k8s-node01 n1
    192.168.68.162 k8s-node02 n2

Install ipvs

Enable IP forwarding

    echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf

Load the bridge module

    yum install -y epel-release
    echo 'br_netfilter' >> /etc/modules-load.d/bridge.conf
    echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf
    echo 'net.bridge.bridge-nf-call-ip6tables=1' >> /etc/sysctl.conf
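Because these settings are appended with >>, running the steps a second time leaves duplicate lines in /etc/sysctl.conf. The sketch below shows one way to make the append idempotent. The helper name ensure_line is hypothetical, and the demo writes to a scratch file rather than the real configuration.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so the setup steps can be re-run safely.
ensure_line() {
  touch "$1"
  grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

# Demo against a scratch file; on a real node the target would be
# /etc/sysctl.conf. The last entry repeats the first on purpose.
conf=$(mktemp)
for setting in \
  'net.ipv4.ip_forward=1' \
  'net.bridge.bridge-nf-call-iptables=1' \
  'net.bridge.bridge-nf-call-ip6tables=1' \
  'net.ipv4.ip_forward=1'
do
  ensure_line "$conf" "$setting"
done
cat "$conf"   # three lines, the repeated setting appears once
rm -f "$conf"
```

grep -x matches whole lines and -F disables regular-expression interpretation, so the dots in the key names are compared literally.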

Install Docker

    # Option 1: USTC mirror
    dnf config-manager --add-repo https://mirrors.ustc.edu.cn/docker-ce/linux/centos/docker-ce.repo
    sed -i 's|download.docker.com|mirrors.ustc.edu.cn/docker-ce|g' /etc/yum.repos.d/docker-ce.repo
    # Option 2: Alibaba Cloud mirror
    dnf config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

Configure Docker

    cat > /etc/docker/daemon.json <<EOF
    {
      "data-root": "/data/docker",
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m",
        "max-file": "100"
      },
      "insecure-registries": ["harbor.xinxainghf.com"],
      "registry-mirrors": ["https://proxy.1panel.live", "https://docker.1panel.top", "https://docker.m.daocloud.io", "https://docker.1ms.run", "https://docker.ketches.cn"]
    }
    EOF
    mkdir -p /etc/systemd/system/docker.service.d

Reboot the machines

Install cri-docker

    yum -y install wget
    wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.9/cri-dockerd-0.3.9.amd64.tgz

Then place the cri-dockerd binary at /usr/bin/cri-dockerd on every node, copying it to root@n1:/usr/bin/cri-dockerd and root@n2:/usr/bin/cri-dockerd.

Configure the cri-docker service (run this part on the master only)

    cat <<"EOF" > /usr/lib/systemd/system/cri-docker.service
    [Unit]
    Description=CRI Interface for Docker Application Container Engine
    Documentation=https://docs.mirantis.com
    After=network-online.target firewalld.service docker.service
    Wants=network-online.target
    Requires=cri-docker.socket
    [Service]
    Type=notify
    ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always
    StartLimitBurst=3
    StartLimitInterval=60s
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TasksMax=infinity
    Delegate=yes
    KillMode=process
    [Install]
    WantedBy=multi-user.target
    EOF

    cat <<"EOF" > /usr/lib/systemd/system/cri-docker.socket
    [Unit]
    Description=CRI Docker Socket for the API
    PartOf=cri-docker.service
    [Socket]
    ListenStream=%t/cri-dockerd.sock
    SocketMode=0660
    SocketUser=root
    SocketGroup=docker
    [Install]
    WantedBy=sockets.target
    EOF

    scp /usr/lib/systemd/system/cri-docker.service root@n1:/usr/lib/systemd/system/cri-docker.service
    scp /usr/lib/systemd/system/cri-docker.service root@n2:/usr/lib/systemd/system/cri-docker.service
    scp /usr/lib/systemd/system/cri-docker.socket root@n1:/usr/lib/systemd/system/cri-docker.socket
    scp /usr/lib/systemd/system/cri-docker.socket root@n2:/usr/lib/systemd/system/cri-docker.socket

Start cri-docker

    systemctl daemon-reload
    systemctl enable cri-docker
    systemctl start cri-docker
    systemctl is-active cri-docker

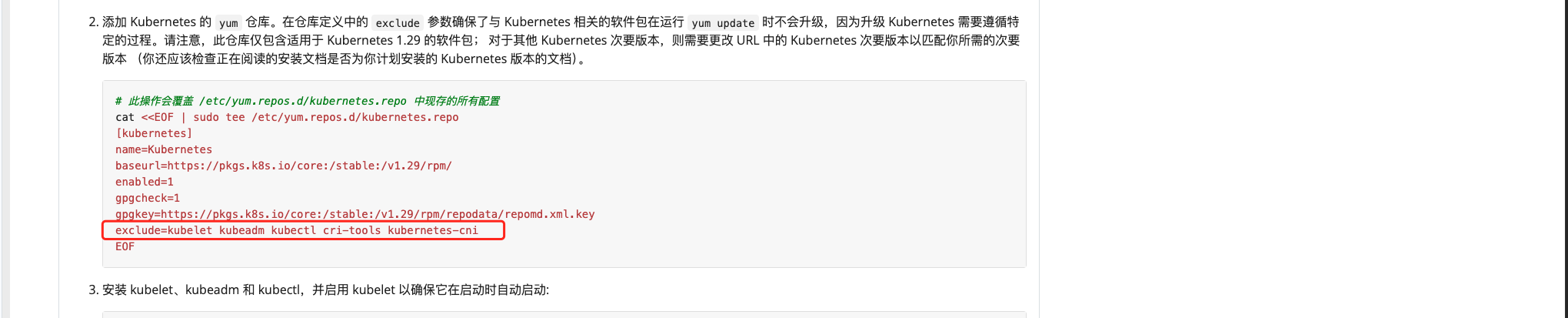

Add the kubeadm package repository

    https://v1-29.docs.kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/
    enabled=1
    gpgcheck=1
    gpgkey=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/repodata/repomd.xml.key
    # exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
    EOF

Install kubeadm 1.29.2

    yum install -y kubelet-1.29.2 kubectl-1.29.2 kubeadm-1.29.2
    systemctl enable kubelet.service

After installation, uncomment the exclude line in the repo file so that a later yum update does not upgrade these packages.

    sed -i 's/\# exclude/exclude/' /etc/yum.repos.d/kubernetes.repo

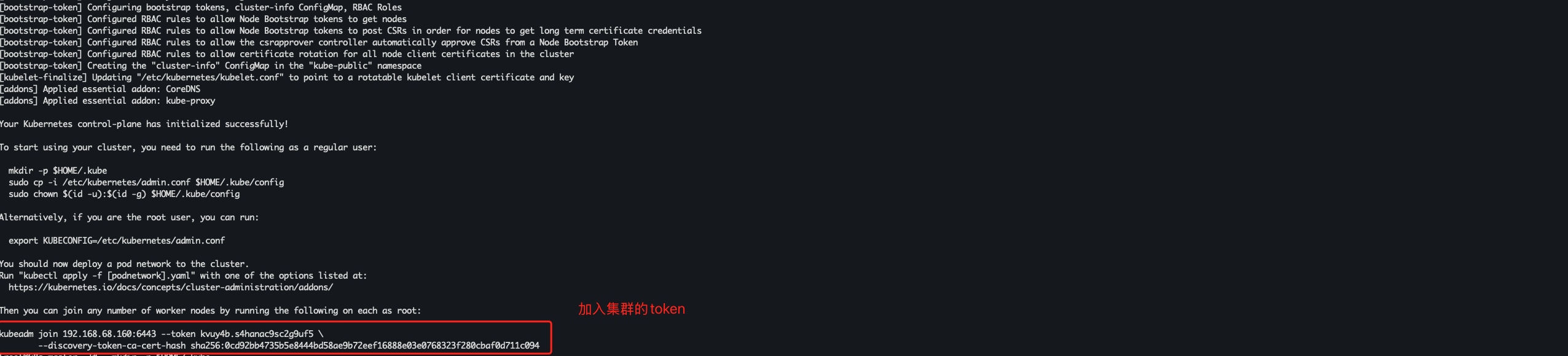

IV. Cluster initialization

Initialize the master

    kubeadm init \
      --apiserver-advertise-address=192.168.68.160 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version 1.29.2 \
      --service-cidr=10.10.0.0/12 \
      --pod-network-cidr=10.244.0.0/16 \
      --ignore-preflight-errors=all \
      --cri-socket unix:///var/run/cri-dockerd.sock
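The Service range, the pod range and the node network must not overlap. The sketch below checks that with plain shell arithmetic. All function names are illustrative, and the node network 192.168.68.0/24 is derived from the netmask and addresses listed earlier. It also shows that 10.10.0.0/12 is not aligned to a /12 boundary: the block it falls in runs from 10.0.0.0 to 10.15.255.255, which is consistent with the Service addresses 10.0.0.1 and 10.2.163.19 that appear later in this guide.

```shell
#!/bin/sh
# Sketch: normalise CIDR blocks and test two of them for overlap.
# Function names are illustrative; only shell arithmetic is used.

ip_to_int() (   # dotted quad -> integer (subshell keeps the IFS change local)
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

int_to_ip() {   # integer -> dotted quad
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

cidr_first() (  # first address of the block, as an integer
  addr=${1%/*}; len=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo $(( $(ip_to_int "$addr") & mask ))
)

cidr_last() (   # last address of the block, as an integer
  len=${1#*/}
  echo $(( $(cidr_first "$1") | (0xFFFFFFFF >> len) ))
)

cidrs_overlap() {  # exit status 0 when the two blocks share any address
  [ "$(cidr_first "$1")" -le "$(cidr_last "$2")" ] &&
  [ "$(cidr_first "$2")" -le "$(cidr_last "$1")" ]
}

service_cidr=10.10.0.0/12
pod_cidr=10.244.0.0/16
node_net=192.168.68.0/24   # assumption: derived from the netmask 255.255.255.0

echo "service range: $(int_to_ip "$(cidr_first "$service_cidr")") - $(int_to_ip "$(cidr_last "$service_cidr")")"
for pair in "$service_cidr $pod_cidr" "$service_cidr $node_net" "$pod_cidr $node_net"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then echo "overlap: $1 $2"; else echo "ok: $1 $2"; fi
done
```

With the values used in this guide the three pairs are disjoint, so the script reports ok for each of them.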

Create the kubeconfig directory for kubectl

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

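Join the worker nodes to the cluster

    # Run on each worker node. Copy the join command printed by kubeadm init and remember to append --cri-socket unix:///var/run/cri-dockerd.sock
    kubeadm join 192.168.68.160:6443 --token kvuy4b.s4hanac9sc2g9uf5 \
      --discovery-token-ca-cert-hash sha256:0cd92bb4735b5e8444bd58ae9b72eef16888e03e0768323f280cbaf0d711c094 \
      --cri-socket unix:///var/run/cri-dockerd.sock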

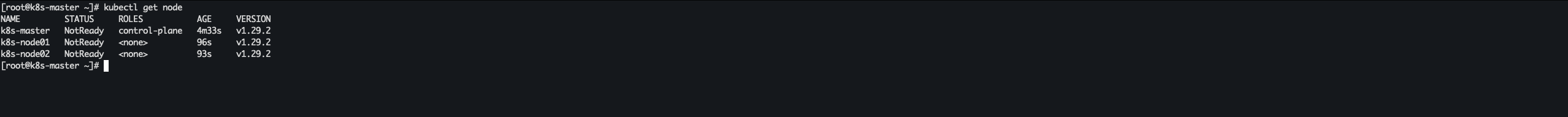

Verify that the worker nodes have joined the cluster

If the token has expired, generate a new join command:

    kubeadm token create --print-join-command

V. Deploy the Calico network plugin

Official documentation

    https://docs.tigera.io/calico/latest/getting-started/kubernetes/self-managed-onprem/onpremises

Download and import the images

    wget "https://alist.wanwancloud.cn/d/%E8%BD%AF%E4%BB%B6/Kubernetes/Calico/calico.zip?sign=45yzzkju25P3oo3e7-D7w4A38_0Ug50Xag585imOt-0=:0" -O calico.zip
    yum -y install unzip
    unzip calico.zip
    cd calico
    tar xf calico-images.tar.gz
    scp -r calico-images root@n1:/root
    scp -r calico-images root@n2:/root
    docker load -i calico-images/calico-cni-v3.26.3.tar
    docker load -i calico-images/calico-kube-controllers-v3.26.3.tar
    docker load -i calico-images/calico-node-v3.26.3.tar
    docker load -i calico-images/calico-typha-v3.26.3.tar

Import the images on the worker nodes

    docker load -i calico-images/calico-cni-v3.26.3.tar
    docker load -i calico-images/calico-kube-controllers-v3.26.3.tar
    docker load -i calico-images/calico-node-v3.26.3.tar
    docker load -i calico-images/calico-typha-v3.26.3.tar

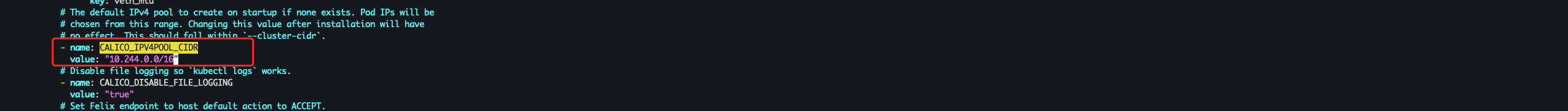

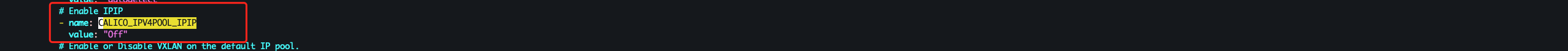

Edit the YAML file

Set CALICO_IPV4POOL_CIDR to the pod network, that is, the range passed as --pod-network-cidr=10.244.0.0/16 when the cluster was initialized:

    value: "10.244.0.0/16"

Switch CALICO_IPV4POOL_IPIP to BGP mode:

    value: "Always"   # change to Off

Enable the Calico network plugin

    kubectl apply -f calico-typha.yaml

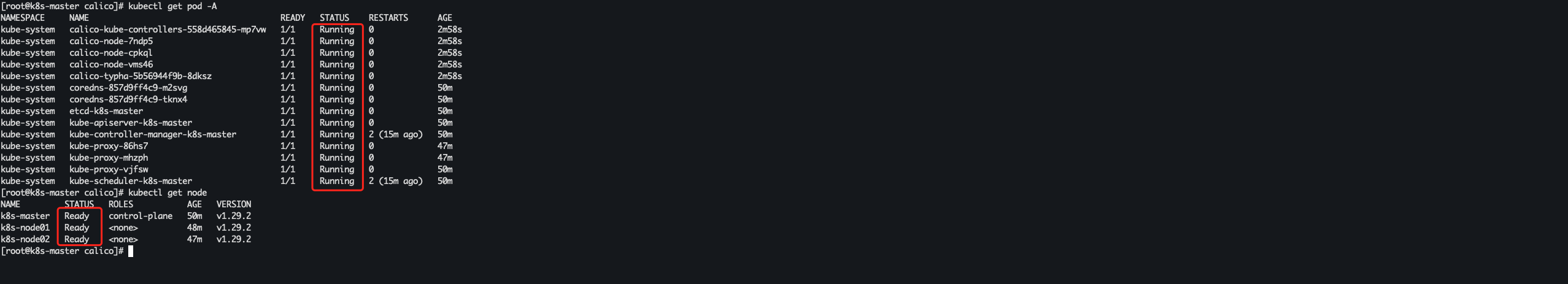

Wait a while. Once every component is Running and every node is Ready, the cluster is up.

    kubectl get pod -A
    kubectl get node

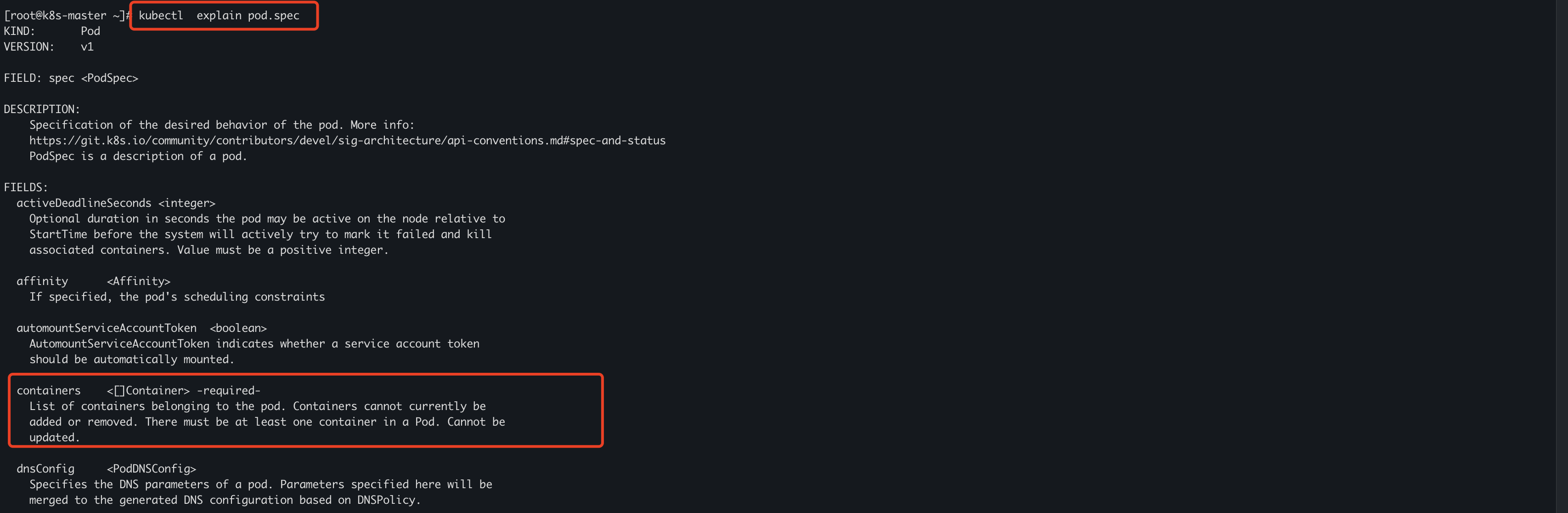

Resource manifests

I. Resource manifests in detail

    [root@k8s-master ~]# kubectl api-versions

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-demo
      namespace: default
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp-1
        image: wangyanglinux/myapp:v1.0
      - name: buxybox-1
        image: wangyanglinux/tools:busybox
        command:
        - "/bin/sh"
        - "-c"
        - "sleep 3600"
    status:
      conditions:
      - lastProbeTime: null
        lastTransitionTime: "2024-12-20T08:49:07Z"
        status: "True"
        type: Initialized
      - lastProbeTime: null

II. Creating resources

Write the resource manifest

    [root@k8s-master ~]# cat > pod.yml <<EOF
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-demo
      namespace: default
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp-1
        image: wangyanglinux/myapp:v1.0
      - name: buxybox-1
        image: wangyanglinux/tools:busybox
        command:
        - "/bin/sh"
        - "-c"
        - "sleep 3600"
    status:
      conditions:
      - lastProbeTime: null
        lastTransitionTime: "2024-12-20T08:49:07Z"
        status: "True"
        type: Initialized
      - lastProbeTime: null
    EOF

Submit the manifest, which describes the desired state, to the Kubernetes cluster. The cluster then builds the matching resources, that is, it instantiates them.

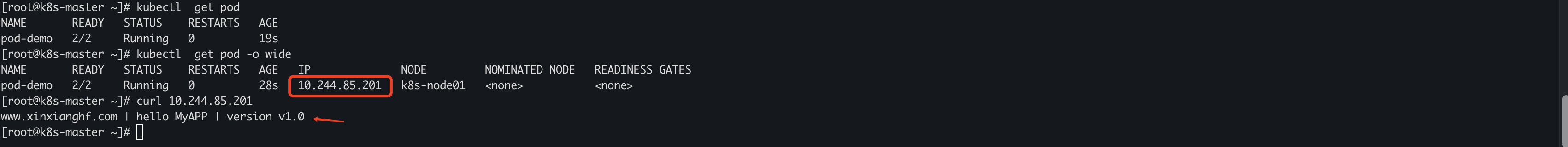

    [root@k8s-master ~]# kubectl create -f pod.yml

    [root@k8s-master ~]# kubectl get pod
    NAME       READY   STATUS              RESTARTS   AGE
    pod-demo   0/2     ContainerCreating   0          5s
    [root@k8s-master ~]# kubectl get pod
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          19s
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME       READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    pod-demo   2/2     Running   0          28s   10.244.85.201   k8s-node01   <none>           <none>

**The NODE column above (k8s-node01) shows which node the pod is running on.**

Log in to node01 and list the containers there. This makes the concept concrete: a pod is a group of containers. This pod is built from three of them: pause, k8s_myapp and k8s_buxybox. The name of each container ends in _pod-demo_default_37ceeb28-143a-4f02-9f2f-6cfdbe25dc1f_0.

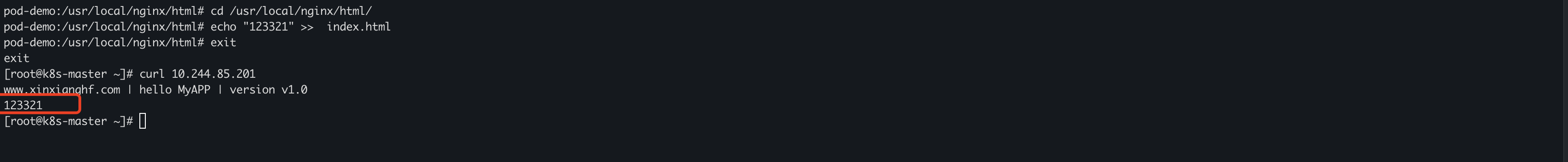

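III. Modifying content inside a pod's container

    [root@k8s-master ~]# kubectl get pod -o wide
    NAME       READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    pod-demo   2/2     Running   0          28s   10.244.85.201   k8s-node01   <none>           <none>
    [root@k8s-master ~]# curl 10.244.85.201
    www.xinxianghf.com | hello MyAPP | version v1.0

**Use kubectl to modify content inside the container**

Open a shell inside the container, change to /usr/local/nginx/html/, append content to the page served by nginx, and exit.

The appended content takes effect immediately.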

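IV. Viewing labels

    [root@k8s-master ~]# kubectl get pod --show-labels
    NAME       READY   STATUS    RESTARTS   AGE   LABELS
    pod-demo   2/2     Running   0          14m   app=myapp
    [root@k8s-master ~]# kubectl get pod -l app
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          14m
    [root@k8s-master ~]# kubectl get pod -l app=myapp
    NAME       READY   STATUS    RESTARTS   AGE
    pod-demo   2/2     Running   0          15m
    [root@k8s-master ~]# kubectl get pod -l app1
    No resources found in default namespace.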

V. Viewing container logs

    # pod-demo is the pod name; -c selects the container
    [root@k8s-master ~]# kubectl logs pod-demo -c myapp-1
    192.168.68.160 - - [20/Dec/2024:14:32:51 +0800] "GET / HTTP/1.1" 200 48 "-" "curl/7.76.1"
    192.168.68.160 - - [20/Dec/2024:14:42:31 +0800] "GET / HTTP/1.1" 200 55 "-" "curl/7.76.1"

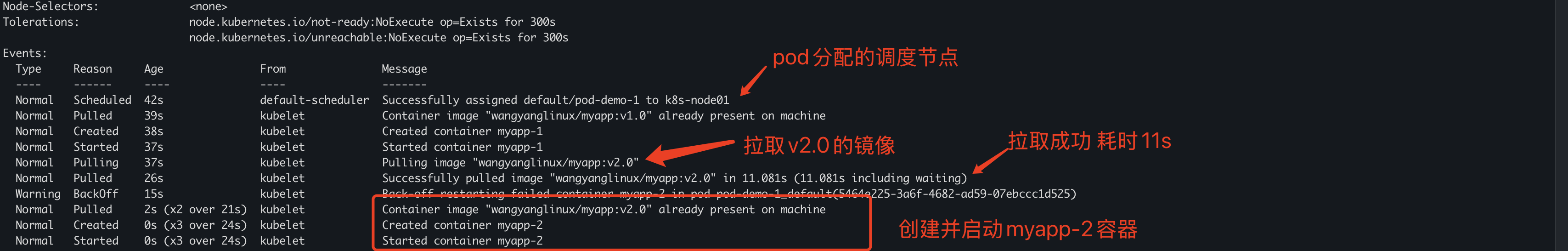

VI. Viewing resource object details

    [root@k8s-master ~]# cat > 2.pod.yml <<EOF
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-demo-1
      namespace: default
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp-1
        image: wangyanglinux/myapp:v1.0
      - name: myapp-2
        image: wangyanglinux/myapp:v2.0
    EOF

    kubectl create -f 2.pod.yml

    kubectl describe pod pod-demo-1

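VII. Pod characteristics

Continuing from section VI, a characteristic of pods shows up.

    kubectl describe pod pod-demo-1

    [root@k8s-master ~]# kubectl get pod
    NAME         READY   STATUS             RESTARTS      AGE
    pod-demo-1   1/2     CrashLoopBackOff   6 (17s ago)   6m41s

Check the logs of the failing container

    [root@k8s-master ~]# kubectl logs pod-demo-1 -c myapp-2
    2024/12/20 15:17:40 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
    nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
    2024/12/20 15:17:40 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
    nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
    2024/12/20 15:17:40 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
    nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
    2024/12/20 15:17:40 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
    nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
    2024/12/20 15:17:40 [emerg] 1#1: bind() to 0.0.0.0:80 failed (98: Address in use)
    nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)
    2024/12/20 15:17:40 [emerg] 1#1: still could not bind()
    nginx: [emerg] still could not bind()

The image wangyanglinux/myapp:v1.0 packages an nginx that was compiled and installed on top of an operating-system base image. The failure shows that the containers of one pod share the network stack and the IPC namespace (the PID namespace is shared only when shareProcessNamespace is enabled). Once container 1 has started and bound port 80, container 2 cannot bind the same port.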

VIII. Common commands

    $ kubectl get pod
        -A, --all-namespaces   list resources in every namespace
        -n                     namespace to use; defaults to default when omitted
        --show-labels          show the labels of each resource
        -l                     filter resources by label: key or key=value
        -o wide                more detail, such as the assigned node and the pod IP

    $ kubectl exec -it podName -c cName -- command
        -c                     may be omitted; defaults to the first container

    $ kubectl explain pod.spec

    $ kubectl logs podName -c cName
        -c                     may be omitted; defaults to the first container

    $ kubectl create -f pod.yml
    $ kubectl delete pod pod-demo
    $ kubectl delete -f pod.yml
    $ kubectl describe pod podName

Pod lifecycle

The complete process from creation to termination.

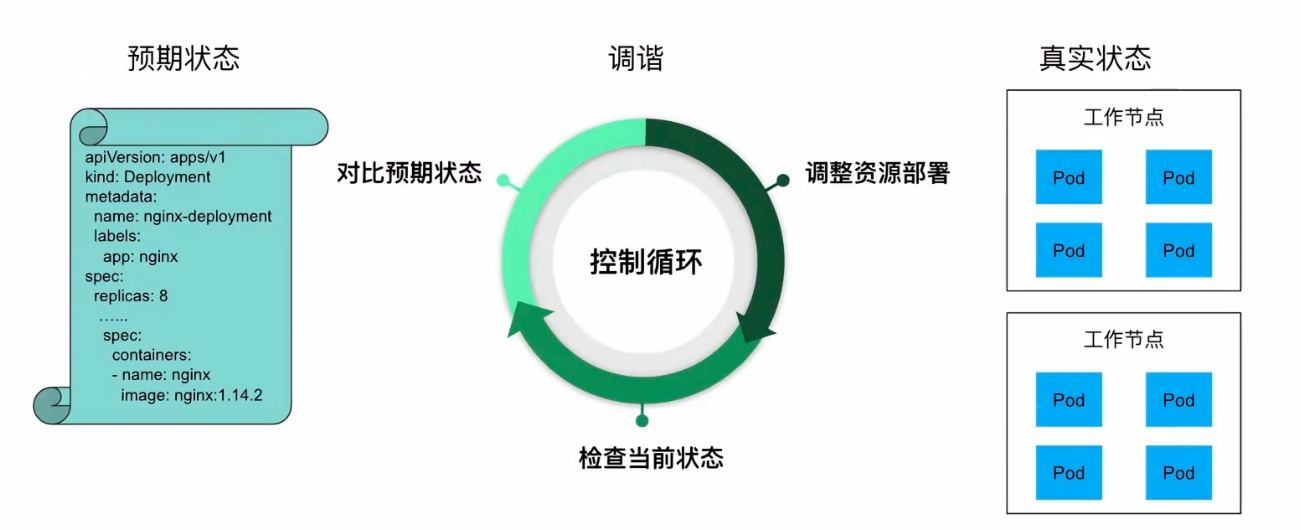

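Pod controllers: the core

Kubernetes runs a set of controllers that keep the current state of the cluster consistent with the desired state. They are the management and control center inside the cluster, its "central brain".

I. ReplicationController

A ReplicationController (RC for short) keeps the number of replicas of a containerized application at the user-defined replica count. If a container exits abnormally, a new Pod is created automatically to replace it, and surplus Pods are reclaimed automatically.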


    [root@k8s-master ~]# cat > rc.yml <<EOF
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: rc-demo
    spec:
      replicas: 3
      selector:
        app: rc-demo
      template:
        metadata:
          labels:
            app: rc-demo
        spec:
          containers:
          - name: rc-demo-container
            image: wangyanglinux/myapp:v1.0
            env:
            - name: GET_HOSTS_FROM
              value: dns
            - name: zhangsan
              value: "123"
            ports:
            - containerPort: 80
    EOF

    [root@k8s-master ~]# kubectl create -f rc.yml

Verify by deleting a pod

    [root@k8s-master ~]# kubectl get rc                    # DESIRED 3, CURRENT 3, READY 3, age 6m26s
    [root@k8s-master ~]# kubectl get pod                   # three pods, all 1/1 Running, age 6m32s
    [root@k8s-master ~]# kubectl delete pod rc-demo-ndx2r
    pod "rc-demo-ndx2r" deleted
    [root@k8s-master ~]# kubectl get pod                   # a replacement pod (age 11s) runs beside the two survivors (age 7m28s)

Verify through label subsets

    [root@k8s-master ~]# kubectl get pod --show-labels                # all three pods carry app=rc-demo
    [root@k8s-master ~]# kubectl label pod rc-demo-ljdhd version=v1   # add a label to pod rc-demo-ljdhd
    [root@k8s-master ~]# kubectl get pod --show-labels
    # rc-demo-ljdhd now shows app=rc-demo,version=v1. The RC selector is still a subset of its labels, so the pod stays managed.

    [root@k8s-master ~]# kubectl get pod --show-labels                # app=rc-demo,version=v1 / app=rc-demo / app=rc-demo
    [root@k8s-master ~]# kubectl label pod <pod-name> app=test --overwrite   # change the app label of one pod to test
    [root@k8s-master ~]# kubectl get pod --show-labels                # there are now 4 pods
    # app=rc-demo               newly created pod
    # app=rc-demo,version=v1
    # app=test                  no longer matches the RC selector, so the RC stops managing it
    # app=rc-demo

    [root@k8s-master ~]# kubectl get pod --show-labels                # app=rc-demo / app=rc-demo,version=v1 / app=test / app=rc-demo
    [root@k8s-master ~]# kubectl label pod <pod-name> app=rc-demo --overwrite   # change the app label back to rc-demo
    [root@k8s-master ~]# kubectl get pod --show-labels                # back to 3 pods: app=rc-demo,version=v1 / app=rc-demo / app=rc-demo

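This shows the key properties of the RC controller:

- It keeps the actual replica count as close as possible to the desired count.
- The two cannot always match. If the nodes lack the resources to create the requested number of pods, the controller keeps retrying to get as close to the desired count as it can.
- When there are too many replicas, the most recently created pods are deleted first.
- When there are too few replicas, new pods are created.
- The RC controller uses labels to decide which pods it manages, and therefore how many it counts.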
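The label experiment can be replayed without a cluster. The sketch below is a simplified model of the reconcile decision, not controller code: the function names and the key=value,key=value label strings are illustrative. A pod counts as owned when every selector pair appears among its labels, and the controller creates or deletes pods to close the gap to the desired count.

```shell
#!/bin/sh
# Sketch of the reconcile decision described above. The function names and the
# "key=value,key=value" label format are illustrative, not a Kubernetes API.

# Succeeds when every selector pair also appears in the pod's labels.
selector_matches() {   # $1 = selector, $2 = pod labels
  for pair in $(echo "$1" | tr ',' ' '); do
    case ",$2," in
      *",$pair,"*) ;;        # this pair is present, keep checking
      *) return 1 ;;         # one missing pair means the pod is not owned
    esac
  done
  return 0
}

# Prints the action a controller would take for the given pods.
reconcile() {   # $1 = desired replicas, $2 = selector, rest = labels of each pod
  desired=$1; selector=$2; shift 2
  owned=0
  for labels in "$@"; do
    if selector_matches "$selector" "$labels"; then owned=$((owned + 1)); fi
  done
  if   [ "$owned" -lt "$desired" ]; then echo "create $((desired - owned))"
  elif [ "$owned" -gt "$desired" ]; then echo "delete $((owned - desired))"
  else echo "in sync"
  fi
}

# An extra label keeps the pod owned.
reconcile 3 app=rc-demo app=rc-demo app=rc-demo,version=v1 app=rc-demo
# Relabelling one pod to app=test releases it, so a replacement is needed.
reconcile 3 app=rc-demo app=rc-demo app=rc-demo,version=v1 app=test
# Relabelling it back yields four owned pods, so one must go.
reconcile 3 app=rc-demo app=rc-demo app=rc-demo app=rc-demo,version=v1 app=rc-demo
```

The three calls print in sync, create 1 and delete 1, matching the three stages of the experiment.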

Adjust the RC replica count from the command line

[Terminal output: kubectl get pod -o wide listings showing 3 pods and then 10 pods]

    [root@k8s-master ~]# kubectl scale rc rc-demo --replicas=2
    [root@k8s-master ~]# kubectl get rc             # DESIRED 2, CURRENT 2, READY 2, age 35m
    [root@k8s-master ~]# kubectl get pod -o wide    # two pods remain, both 1/1 Running, age 35m
    # 10.244.85.205 on k8s-node01
    # 10.244.58.200 on k8s-node02

II. ReplicaSet controller

    [root@k8s-master ~]# cat > rs.yml <<EOF
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: rs-ml-demo
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: rs-ml-demo
          domain: rs-t1
      template:
        metadata:
          labels:
            app: rs-ml-demo
            domain: rs-t1
            version: v1
        spec:
          containers:
          - name: rs-ml-demo-container
            image: wangyanglinux/myapp:v1.0
            env:
            - name: GET_HOSTS_FROM
              value: dns
            ports:
            - containerPort: 80
    EOF

    [root@k8s-master ~]# kubectl create -f rs.yml

    [root@k8s-master ~]# kubectl get pod                      # three pods, all 1/1 Running, age 2m12s, among them rs-ml-demo-4shl5
    [root@k8s-master ~]# kubectl get rs                       # DESIRED 3, CURRENT 3, READY 3, age 2m57s
    [root@k8s-master ~]# kubectl delete pod rs-ml-demo-4shl5
    pod "rs-ml-demo-4shl5" deleted
    [root@k8s-master ~]# kubectl get pod                      # replacement rs-ml-demo-7bhfg (age 12s) runs beside the two survivors (age 3m41s)

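matchExpressions selectors

selector.matchExpressions

The matchExpressions field offers several ways to select pods:

- In: the label value is in a given list
- NotIn: the label value is not in a given list
- Exists: the label exists
- DoesNotExist: the label does not exist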


    [root@k8s-master ~]# cat > rs-1.yml <<EOF
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: rs-me-exists-demo
    spec:
      selector:
        matchExpressions:
          - key: app
            operator: Exists
      template:
        metadata:
          labels:
            app: spring-k8s
        spec:
          containers:
          - name: rs-me-exists-demo-container
            image: wangyanglinux/myapp:v1.0
            ports:
            - containerPort: 80
    EOF

    kubectl create -f rs-1.yml

    [root@k8s-master ~]# kubectl get pod      # rs-me-exists-demo-v245q is Running beside the three rs-ml-demo pods
    [root@k8s-master ~]# kubectl get rs       # no replica count was written in the manifest, so it defaults to 1
    NAME                DESIRED   CURRENT   READY   AGE
    rs-me-exists-demo   1         1         1       5m45s
    rs-ml-demo          3         3         3       29m
    [root@k8s-master ~]# kubectl get pod --show-labels
    # rs-me-exists-demo-v245q    app=spring-k8s                            created by the ReplicaSet whose rule is "key app Exists"
    # the three rs-ml-demo pods  app=rs-ml-demo,domain=rs-t1,version=v1
    [root@k8s-master ~]# kubectl label pod rs-me-exists-demo-v245q app=test-1 --overwrite
    pod/rs-me-exists-demo-v245q labeled
    [root@k8s-master ~]# kubectl get pod --show-labels
    # rs-me-exists-demo-v245q now carries app=test-1 and was not deleted: the key app still exists, so the selector still matches

    spec:
      selector:
        matchExpressions:
          - key: app
            operator: Exists
      template:
        metadata:
          labels:
            app: spring-k8s
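To make the four operators concrete, here is a small shell model of how a single matchExpressions rule could be evaluated against a pod's labels. The function names and the comma-separated label strings are illustrative assumptions, not the way the API server represents them. The demo reproduces the observation above: after relabelling to app=test-1 the key app still exists, so the pod stays selected.

```shell
#!/bin/sh
# Sketch: evaluate one matchExpressions rule against a pod's labels.
# Labels use an illustrative "key=value,key=value" string and values a
# comma-separated list; this is a model, not Kubernetes code.

label_value() {   # prints the value of key $1 in labels $2; fails if absent
  for pair in $(echo "$2" | tr ',' ' '); do
    if [ "${pair%%=*}" = "$1" ]; then echo "${pair#*=}"; return 0; fi
  done
  return 1
}

expression_matches() {   # $1 = key, $2 = operator, $3 = values, $4 = labels
  case $2 in
    Exists)
      label_value "$1" "$4" >/dev/null ;;
    DoesNotExist)
      ! label_value "$1" "$4" >/dev/null ;;
    In)
      value=$(label_value "$1" "$4") || return 1
      case ",$3," in *",$value,"*) return 0 ;; *) return 1 ;; esac ;;
    NotIn)
      # A pod without the key also satisfies NotIn.
      value=$(label_value "$1" "$4") || return 0
      case ",$3," in *",$value,"*) return 1 ;; *) return 0 ;; esac ;;
    *)
      echo "unknown operator: $2" >&2; return 2 ;;
  esac
}

if expression_matches app Exists "" app=test-1; then
  echo "still selected"
else
  echo "released"
fi
```

Compared with the matchLabels form, where changing the value of app releases the pod, an Exists rule only cares that the key is present.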

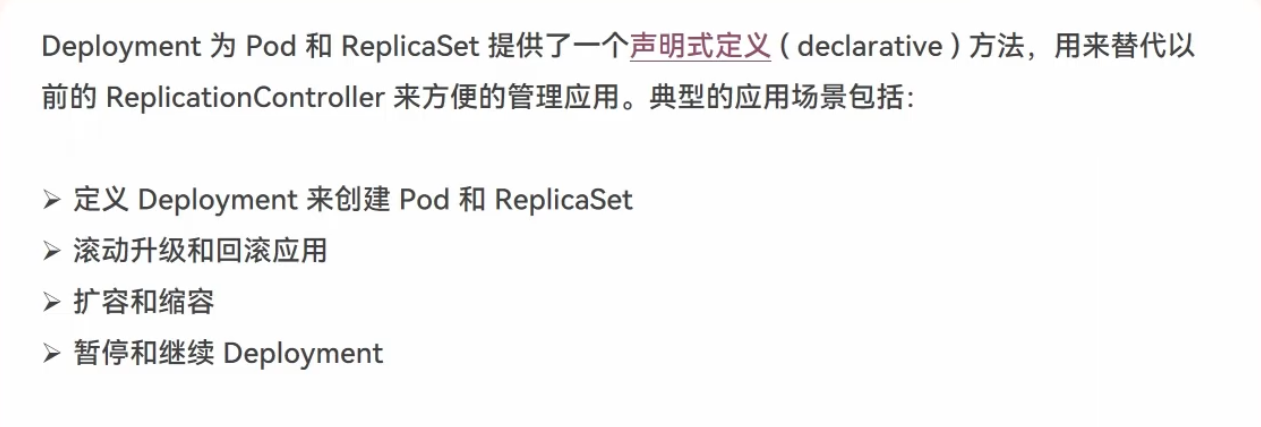

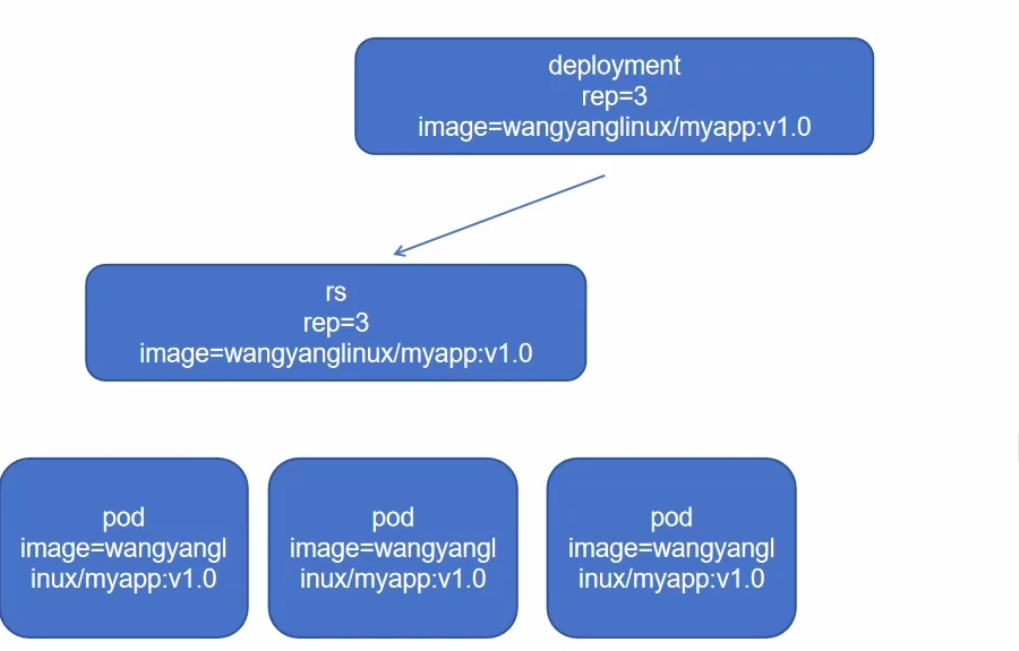

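III. Deployment controller

Basic concepts

Declarative versus imperative

A declarative statement describes the end result: it states the intent rather than the process of achieving it. In Kubernetes that means saying "there should be a ReplicaSet containing three Pods". The imperative counterpart is a command: "create a ReplicaSet containing three Pods".

replace compared with apply

- replace: completely replaces the configuration of the existing resource with the new one. The new configuration overwrites every field and attribute of the existing resource, including fields it does not specify, so the whole resource is replaced.
- apply: partially updates the configuration of the existing resource. Based on the supplied file or arguments, it updates only the parts that differ from the new configuration and does not overwrite the whole resource.

Field-level updates

- replace: because it is a full replacement, it overwrites all fields and attributes whether or not the new configuration specifies them.
- apply: updates only the fields and attributes that differ in the new configuration and leaves unspecified fields untouched.
- replace: does not support partial updates; it replaces the configuration of the whole resource.
- apply: supports partial updates; only the parts that changed in the new configuration are updated and the unspecified parts are kept.
- replace: ignores the state of other resource configuration and replaces the resource directly.
- apply: can be combined with -f or -k to read several resource configurations from files or directories and update them according to the current state of the resources in the cluster.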

    [root@k8s-master ~]# kubectl create deployment myapp --image=wangyanglinux/myapp:v1.0 --dry-run -o yaml
    W1224 11:38:42.790200  874724 helpers.go:704] --dry-run is deprecated and can be replaced with --dry-run=client.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: myapp
      name: myapp
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: myapp
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: myapp
        spec:
          containers:
          - image: wangyanglinux/myapp:v1.0
            name: myapp
            resources: {}
    status: {}


    [root@k8s-master ~]# cat > deployment.yml <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v1.0
            name: deployment-demo-container
    EOF

    [root@k8s-master ~]# kubectl apply -f deployment.yml
    [root@k8s-master ~]# kubectl get deploy
    NAME              READY   UP-TO-DATE   AVAILABLE   AGE
    deployment-demo   1/1     1            1           30s
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6995c75668-5g8p7   1/1     Running   0          70s

Inspect the data that the deployment-demo controller stores in etcd

    [root@k8s-master ~]# kubectl get deployment deployment-demo -o yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "1"
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"deployment-demo"},"name":"deployment-demo","namespace":"default"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"deployment-demo"}},"template":{"metadata":{"labels":{"app":"deployment-demo"}},"spec":{"containers":[{"image":"wangyanglinux/myapp:v2.0","name":"deployment-demo-container"}]}}}}
      creationTimestamp: "2024-12-20T12:48:41Z"
      generation: 1
      labels:
        app: deployment-demo
      name: deployment-demo
      namespace: default
      resourceVersion: "394942"
      uid: da19be8e-1d44-4855-b981-84b142f681d7
    spec:
      progressDeadlineSeconds: 600
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: deployment-demo
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v2.0
            imagePullPolicy: IfNotPresent
            name: deployment-demo-container
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
    status:
      availableReplicas: 1
      conditions:
      - lastTransitionTime: "2024-12-20T12:48:58Z"
        lastUpdateTime: "2024-12-20T12:48:58Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2024-12-20T12:48:41Z"
        lastUpdateTime: "2024-12-20T12:48:58Z"
        message: ReplicaSet "deployment-demo-6465d4c5c9" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 1
      replicas: 1
      updatedReplicas: 1

[Terminal output: a kubectl get pod -o wide listing with 10 pods, followed by a curl that returns "hello MyAPP | version v1.0"]

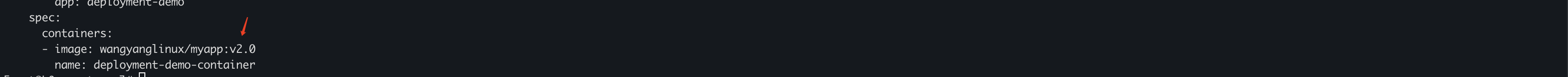

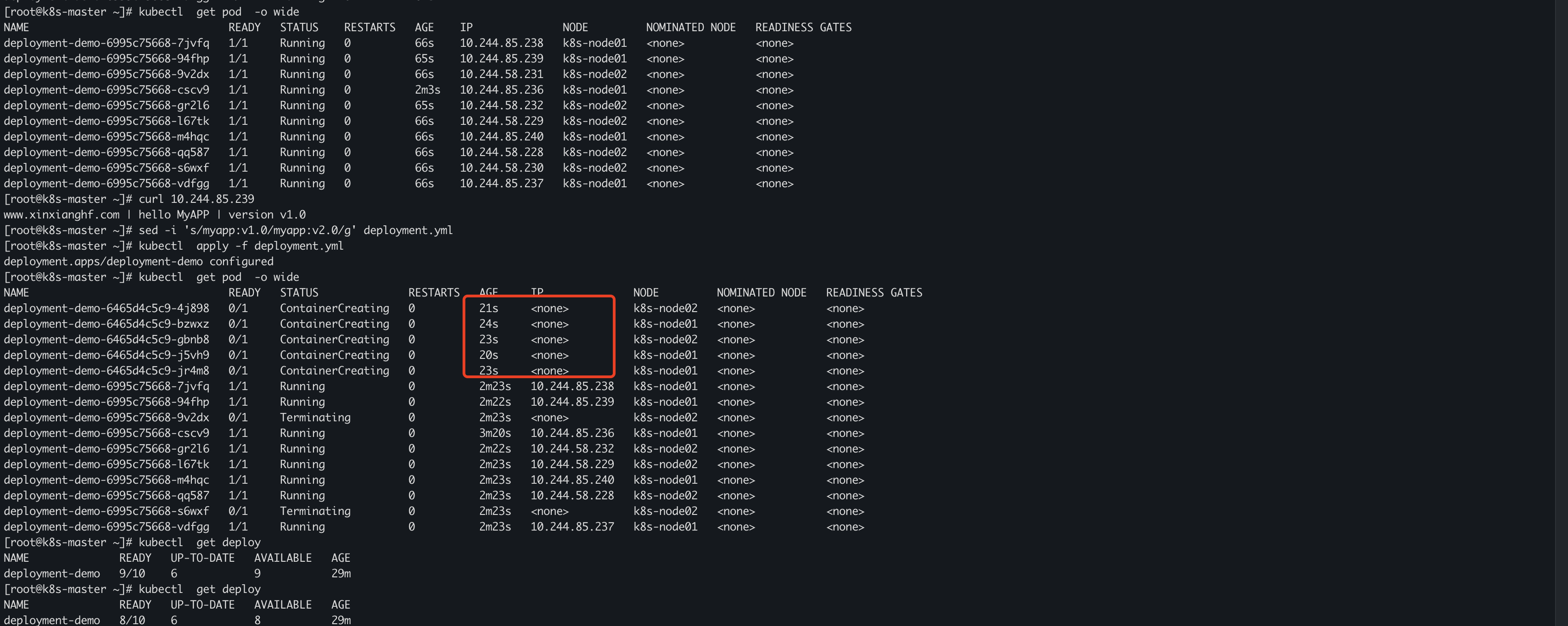

Version upgrade

Change the image version to v2.0

    [root@k8s-master ~]# sed -i 's/myapp:v1.0/myapp:v2.0/g' deployment.yml

[Terminal output: the manifest is applied, kubectl get pod -o wide lists 10 pods, and a curl returns "hello MyAPP | version v2.0"]

The update also reveals how the rollout proceeds: a new-version pod is created and reaches Running before the old-version pod is deleted.

apply changes only the parts you declared that differ from the running state and leaves everything else alone. For example, after scale sets deployment-demo to 10 replicas, applying the updated manifest still leaves 10 pods.

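Build the resource with replace

    [root@k8s-master ~]# sed -i 's/myapp:v2.0/myapp:v3.0/g' deployment.yml
    [root@k8s-master ~]# kubectl replace -f deployment.yml
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE    IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-7dbccb74d6-twd84   1/1     Running   0          111s   10.244.85.246   k8s-node01   <none>           <none>
    [root@k8s-master ~]# kubectl get deployment
    NAME              READY   UP-TO-DATE   AVAILABLE   AGE
    deployment-demo   1/1     1            1           40m
    [root@k8s-master ~]# curl 10.244.85.246
    www.xinxianghf.com | hello MyAPP | version v3.0

Conclusion: replace rebuilds the object entirely from deployment.yml. It overwrites the whole configuration instead of updating only the parts that differ from the previous version. The pod count is 1 because deployment.yml does not set a replica count, which defaults to 1, so replace leaves a single pod.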

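Use diff to compare the current deployment.yml manifest with the running resource object and see whether they differ

    [root@k8s-master ~]# kubectl diff -f deployment.yml    # no output: the live resource matches the manifest

After the image in deployment.yml is changed to v4.0, the same command reports the difference:

    @@ -4,7 +4,7 @@
    -  generation: 9
    +  generation: 10
    @@ -30,7 +30,7 @@
    -      - image: wangyanglinux/myapp:v3.0    # the v3.0 line is removed
    +      - image: wangyanglinux/myapp:v4.0    # the v4.0 line is added

Tip: in a production environment, diff can tell you whether a manifest has already been applied. No output means it has been applied. Any reported difference means the manifest has not yet been re-applied to upgrade the resource.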
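The tip above can be scripted. kubectl diff documents its exit status as 0 when no differences are found, 1 when differences are found, and greater than 1 when kubectl or the diff program fails. The sketch below turns that status into a word. The wrapper name manifest_state is hypothetical, and it simply runs whatever command it is given, so it can be exercised without a cluster.

```shell
#!/bin/sh
# Sketch: classify a manifest by the exit status of a diff command.
# manifest_state is a hypothetical wrapper around any command.
manifest_state() {
  rc=0
  "$@" >/dev/null 2>&1 || rc=$?
  case $rc in
    0) echo "applied" ;;   # no differences found
    1) echo "pending" ;;   # differences found
    *) echo "error" ;;     # the command itself failed
  esac
}

# Intended use on the master node:
#   manifest_state kubectl diff -f deployment.yml
manifest_state true    # stands in for a diff that found nothing
manifest_state false   # stands in for a diff that found changes
```

Treating every status above 1 as an error matters in automation: an unreachable API server should not be mistaken for a manifest that still needs to be applied.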

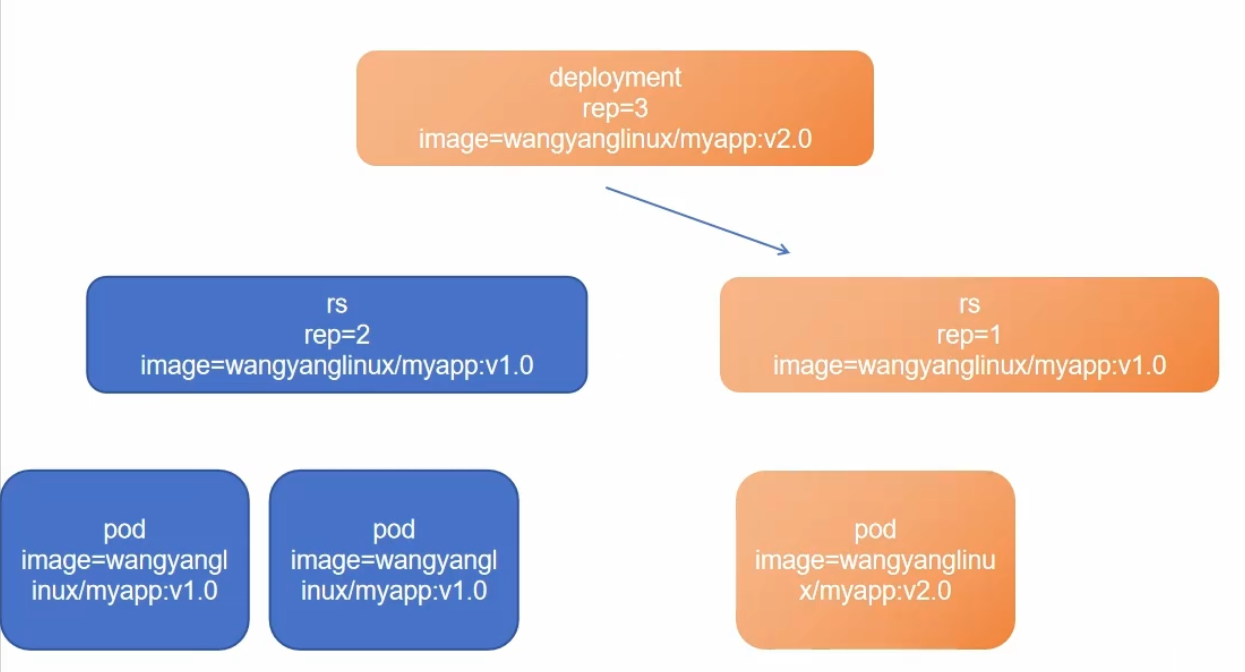

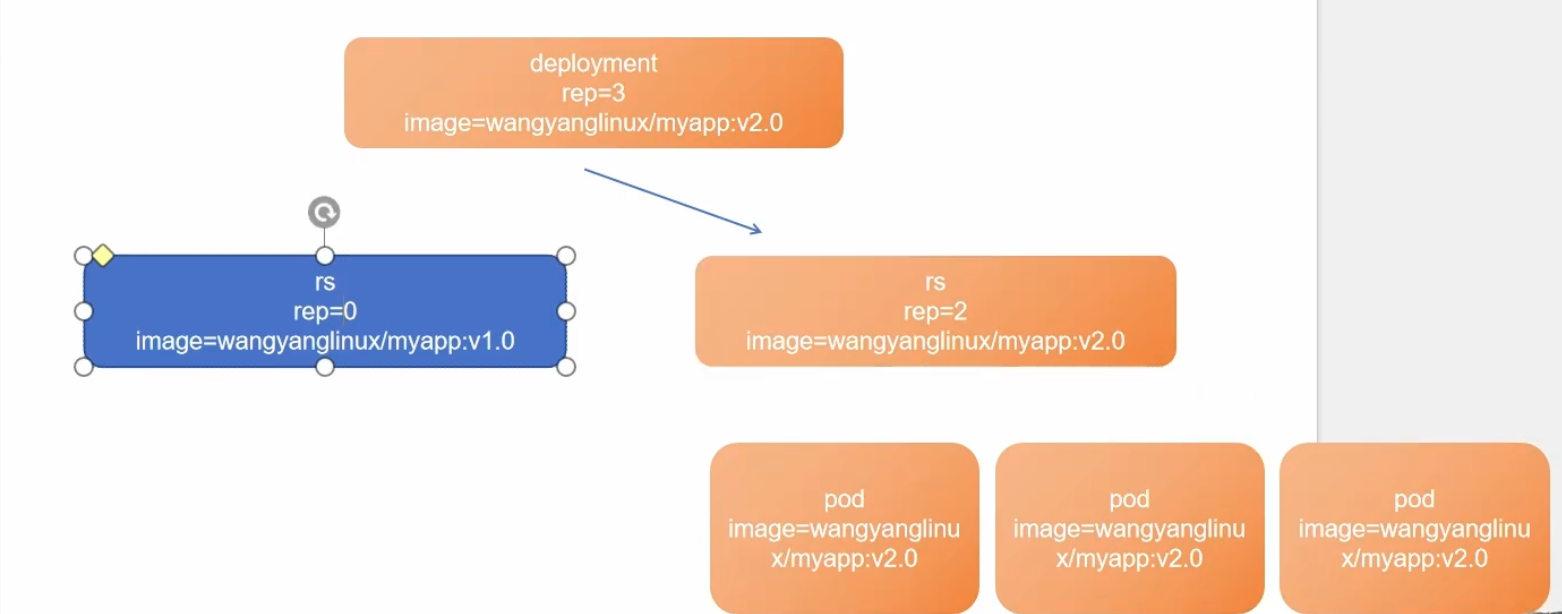

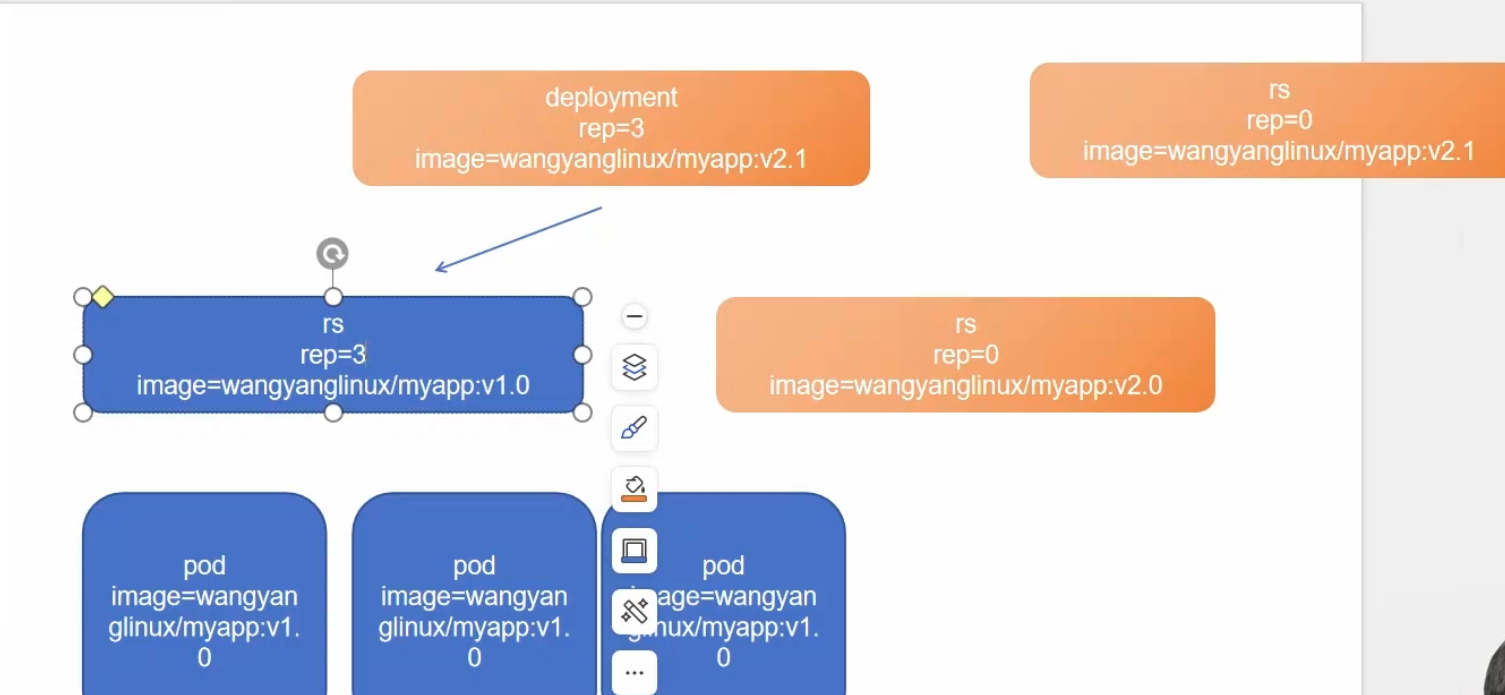

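3.1 Rolling updates

This is how a Deployment rolls its pods to a new version. Starting from 3 pods at v1, the Deployment controller replaces pods a few at a time: it drives a new ReplicaSet to create v2 pods while the v1 ReplicaSet is scaled down. The service stays reachable throughout, and all pods are gradually migrated to v2.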
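How many pods may be added or removed at each step follows from the strategy fields maxSurge and maxUnavailable, which default to 25%. Kubernetes rounds a maxSurge percentage up and a maxUnavailable percentage down. The sketch below works through that arithmetic for a few replica counts; the function names are illustrative.

```shell
#!/bin/sh
# Sketch: resolve percentage-based rollingUpdate limits to pod counts.
# maxSurge rounds up, maxUnavailable rounds down.
max_surge()       { echo $(( ($1 * $2 + 99) / 100 )); }   # $1 replicas, $2 percent
max_unavailable() { echo $(( $1 * $2 / 100 )); }          # $1 replicas, $2 percent

for replicas in 1 3 10; do
  surge=$(max_surge "$replicas" 25)
  unavailable=$(max_unavailable "$replicas" 25)
  echo "replicas=$replicas: at most $((replicas + surge)) pods in total, at least $((replicas - unavailable)) available"
done
```

For 1 or 3 replicas the defaults resolve to a surge of 1 and 0 unavailable, so a new pod has to become ready before an old one is removed. For 10 replicas the limits are 13 pods in total and at least 8 available.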

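3.2 Rollback

After a Deployment finishes a rolling upgrade, the manifest of the original v1 ReplicaSet is not deleted. It stays in the etcd database.

On rollback, the pods of the v2 ReplicaSet are removed step by step while the v1 ReplicaSet creates v1 pods again. An upgrade to v2.1 works like the rolling upgrade above: a v2.1 ReplicaSet is created and its pods gradually replace the v1 pods.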

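3.3 Differences between kubectl create, apply and replace

- create is imperative: it creates the resource described by the manifest.
- apply is declarative: it updates whatever differs between the manifest and the live resource.
- replace is imperative: it overwrites the existing resource with the manifest in full (the object itself is deleted and recreated only with --force).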

    [root@k8s-master ~]# cat deployment-1.yml        # the deployment-demo manifest with image wangyanglinux/myapp:v1.0
    [root@k8s-master ~]# kubectl create -f deployment-1.yml
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-6995c75668-d7t5j   1/1     Running   0          29s   10.244.85.247   k8s-node01   <none>           <none>
    [root@k8s-master ~]# curl 10.244.85.247
    www.xinxianghf.com | hello MyAPP | version v1.0
    [root@k8s-master ~]# sed -i 's/myapp:v1.0/myapp:v2.0/' deployment-1.yml
    [root@k8s-master ~]# kubectl create -f deployment-1.yml
    Error from server (AlreadyExists): error when creating "deployment-1.yml": deployments.apps "deployment-demo" already exists

    [root@k8s-master ~]# kubectl delete -f deployment-1.yml
    deployment.apps "deployment-demo" deleted
    [root@k8s-master ~]# kubectl get pod -o wide
    No resources found in default namespace.
    [root@k8s-master ~]# kubectl apply -f deployment-1.yml
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-6465d4c5c9-625rx   1/1     Running   0          67s   10.244.85.248   k8s-node01   <none>           <none>
    [root@k8s-master ~]# curl 10.244.85.248
    www.xinxianghf.com | hello MyAPP | version v2.0
    [root@k8s-master ~]# sed -i 's/myapp:v2.0/myapp:v3.0/' deployment-1.yml
    [root@k8s-master ~]# kubectl apply -f deployment-1.yml
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-7dbccb74d6-brnjv   1/1     Running   0          2m39s   10.244.58.240   k8s-node02   <none>           <none>
    [root@k8s-master ~]# curl 10.244.58.240
    www.xinxianghf.com | hello MyAPP | version v3.0

    [root@k8s-master ~]# sed -i 's/myapp:v3.0/myapp:v2.0/' deployment-1.yml
    [root@k8s-master ~]# kubectl replace -f deployment-1.yml
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS              RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-6465d4c5c9-gwntz   0/1     ContainerCreating   0          3s      <none>          k8s-node01   <none>           <none>
    deployment-demo-7dbccb74d6-brnjv   1/1     Running             0          4m26s   10.244.58.240   k8s-node02   <none>           <none>
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-6465d4c5c9-gwntz   1/1     Running   0          25s   10.244.85.249   k8s-node01   <none>           <none>
    [root@k8s-master ~]# curl 10.244.85.249
    www.xinxianghf.com | hello MyAPP | version v2.0

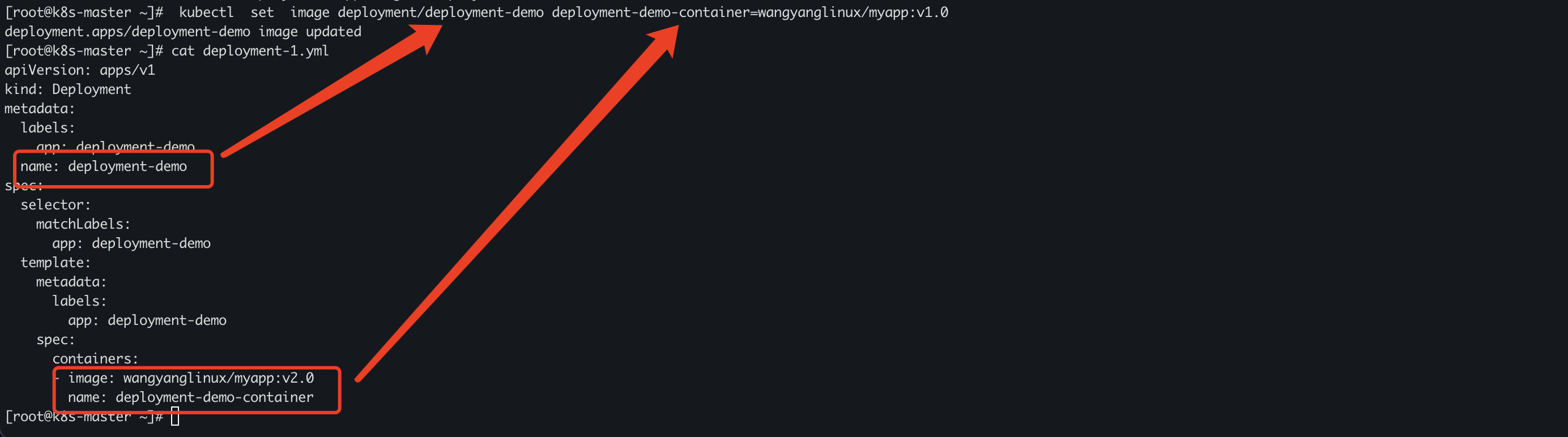

3.4 Common Deployment commands

    [root@k8s-master ~]# kubectl create -f deployment.yml --record
    [root@k8s-master ~]# kubectl scale deployment nginx-deployment --replicas=10
    [root@k8s-master ~]# kubectl autoscale deployment nginx-deployment --min=10 --max=15 --cpu-percent=80
    [root@k8s-master ~]# kubectl set image deployment/deployment-demo deployment-demo-container=wangyanglinux/myapp:v1.0

3.5 Rolling update experiment

[Terminal output: the Deployment is created (pod deployment-demo-6995c75668-qn8jv is seen in ContainerCreating at 2s), kubectl get svc lists a Service on 80/TCP (age 5s) beside the kubernetes Service on 443/TCP (age 3d3h), and a first curl returns "hello MyAPP | version v1.0"]

    # Open a second terminal and poll the cluster IP of the Service in a loop
    [root@k8s-master ~]# while true; do curl 10.2.163.19 && sleep 1; done
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0

    # Back in the first terminal, update the container image of the pods
    [root@k8s-master ~]# kubectl set image deployment/deployment-demo deployment-demo-container=wangyanglinux/myapp:v2.0
    deployment.apps/deployment-demo image updated
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS              RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-6465d4c5c9-2xxnq   0/1     ContainerCreating   0          4s      <none>          k8s-node01   <none>           <none>
    deployment-demo-6465d4c5c9-6gdff   1/1     Running             0          18s     10.244.85.254   k8s-node01   <none>           <none>
    deployment-demo-6465d4c5c9-6zspk   1/1     Running             0          17s     10.244.85.255   k8s-node01   <none>           <none>
    deployment-demo-6465d4c5c9-n4fb5   1/1     Running             0          17s     10.244.58.243   k8s-node02   <none>           <none>
    deployment-demo-6465d4c5c9-w748p   0/1     ContainerCreating   0          6s      <none>          k8s-node02   <none>           <none>
    deployment-demo-6995c75668-25gxf   1/1     Terminating         0          8m44s   10.244.58.242   k8s-node02   <none>           <none>
    deployment-demo-6995c75668-2tlhq   1/1     Terminating         0          8m44s   10.244.85.252   k8s-node01   <none>           <none>
    deployment-demo-6995c75668-kdrx8   1/1     Terminating         0          9m37s   10.244.85.251   k8s-node01   <none>           <none>
    deployment-demo-6995c75668-qn8jv   1/1     Running             0          8m44s   10.244.58.241   k8s-node02   <none>           <none>
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    deployment-demo-6465d4c5c9-2xxnq   1/1     Running   0          27s   10.244.85.192   k8s-node01   <none>           <none>
    deployment-demo-6465d4c5c9-6gdff   1/1     Running   0          41s   10.244.85.254   k8s-node01   <none>           <none>
    deployment-demo-6465d4c5c9-6zspk   1/1     Running   0          40s   10.244.85.255   k8s-node01   <none>           <none>
    deployment-demo-6465d4c5c9-n4fb5   1/1     Running   0          40s   10.244.58.243   k8s-node02   <none>           <none>
    deployment-demo-6465d4c5c9-w748p   1/1     Running   0          29s   10.244.58.244   k8s-node02   <none>           <none>

    # Back in the polling terminal: v1 and v2 responses alternate until v2 has fully taken over. This is the rolling upgrade in action.
    [root@k8s-master ~]# while true; do curl 10.2.163.19 && sleep 1; done
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v1.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0

3.6 Deployment: canary deployment

    # Continuing from the rolling update experiment in 3.5: delete the pods created by the Deployment but keep the Service
    [root@k8s-master ~]# kubectl delete -f deployment.yml
    [root@k8s-master ~]# kubectl get pod
    No resources found in default namespace.
    [root@k8s-master ~]# kubectl get svc      # both Services remain: 10.2.163.19 (80/TCP, age 20h) and 10.0.0.1 (443/TCP, age 3d23h)

    [root@k8s-master ~]# cat > deployment.yml <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      replicas: 10
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v1.0
            name: deployment-demo-container
    EOF

    [root@k8s-master ~]# kubectl apply -f deployment.yml    # create the Deployment
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6995c75668-crh9g   1/1     Running   0          80s
    deployment-demo-6995c75668-fk2fj   1/1     Running   0          80s
    deployment-demo-6995c75668-glzph   1/1     Running   0          80s
    deployment-demo-6995c75668-ln92q   1/1     Running   0          81s
    deployment-demo-6995c75668-lq76f   1/1     Running   0          81s
    deployment-demo-6995c75668-m4nmn   1/1     Running   0          80s
    deployment-demo-6995c75668-q5969   1/1     Running   0          80s
    deployment-demo-6995c75668-rfpl5   1/1     Running   0          81s
    deployment-demo-6995c75668-th6jv   1/1     Running   0          80s
    deployment-demo-6995c75668-z2tl5   1/1     Running   0          80s
    [root@k8s-master ~]# kubectl get svc      # unchanged: 10.2.163.19 (80/TCP, age 20h) and 10.0.0.1 (443/TCP, age 4d)
    [root@k8s-master ~]# kubectl get deployment deployment-demo -o yaml    # dump the deployment-demo controller as a manifest
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "1"
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"deployment-demo"},"name":"deployment-demo","namespace":"default"},"spec":{"replicas":10,"selector":{"matchLabels":{"app":"deployment-demo"}},"template":{"metadata":{"labels":{"app":"deployment-demo"}},"spec":{"containers":[{"image":"wangyanglinux/myapp:v1.0","name":"deployment-demo-container"}]}}}}
      creationTimestamp: "2024-12-21T11:13:06Z"
      generation: 1
      labels:
        app: deployment-demo
      name: deployment-demo
      namespace: default
      resourceVersion: "516213"
      uid: 6a0e236a-c726-46cd-a5f0-4a6411d51297
    spec:
      progressDeadlineSeconds: 600
      replicas: 10
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: deployment-demo
      strategy:
        rollingUpdate:            # the update mode is a rolling update
          maxSurge: 25%           # how many pods may be created above the replica count; here 25% of the replicas
          maxUnavailable: 25%     # how many replicas may be unavailable during the update; here 25% of the replicas
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v1.0
            imagePullPolicy: IfNotPresent
            name: deployment-demo-container
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
    status:
      availableReplicas: 10
      conditions:
      - lastTransitionTime: "2024-12-21T11:14:01Z"
        lastUpdateTime: "2024-12-21T11:14:01Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2024-12-21T11:13:06Z"
        lastUpdateTime: "2024-12-21T11:14:06Z"
        message: ReplicaSet "deployment-demo-6995c75668" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 10
      replicas: 10
      updatedReplicas: 10

    # Set the rolling update strategy of deployment-demo: at most 1 extra new-version pod, 0 unavailable pods
    [root@k8s-master ~]# kubectl patch deployment deployment-demo -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'

    # Trigger a rolling update by changing the image to v2.0
    [root@k8s-master ~]# kubectl patch deployment deployment-demo -p '{"spec":{"template":{"spec":{"containers":[{"name":"deployment-demo-container","image":"wangyanglinux/myapp:v2.0"}]}}}}'

    # Same patch, followed immediately by a pause of the rollout
    [root@k8s-master ~]# kubectl patch deployment deployment-demo -p '{"spec":{"template":{"spec":{"containers":[{"name":"deployment-demo-container","image":"wangyanglinux/myapp:v2.0"}]}}}}' && kubectl rollout pause deployment deployment-demo
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS              RESTARTS   AGE
    deployment-demo-6465d4c5c9-jsnsl   0/1     ContainerCreating   0          7s    # this is the newly created pod
    deployment-demo-6995c75668-crh9g   1/1     Running             0          22m
    deployment-demo-6995c75668-fk2fj   1/1     Running             0          22m
    deployment-demo-6995c75668-glzph   1/1     Running             0          22m
    deployment-demo-6995c75668-ln92q   1/1     Running             0          22m
    deployment-demo-6995c75668-lq76f   1/1     Running             0          22m
    deployment-demo-6995c75668-m4nmn   1/1     Running             0          22m
    deployment-demo-6995c75668-q5969   1/1     Running             0          22m
    deployment-demo-6995c75668-rfpl5   1/1     Running             0          22m
    deployment-demo-6995c75668-th6jv   1/1     Running             0          22m
    deployment-demo-6995c75668-z2tl5   1/1     Running             0          22m
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6465d4c5c9-jsnsl   1/1     Running   0          2m25s
    deployment-demo-6995c75668-crh9g   1/1     Running   0          25m
    deployment-demo-6995c75668-fk2fj   1/1     Running   0          25m
    deployment-demo-6995c75668-glzph   1/1     Running   0          25m
    deployment-demo-6995c75668-ln92q   1/1     Running   0          25m
    deployment-demo-6995c75668-lq76f   1/1     Running   0          25m
    deployment-demo-6995c75668-m4nmn   1/1     Running   0          25m
    deployment-demo-6995c75668-q5969   1/1     Running   0          25m
    deployment-demo-6995c75668-rfpl5   1/1     Running   0          25m
    deployment-demo-6995c75668-th6jv   1/1     Running   0          25m
    deployment-demo-6995c75668-z2tl5   1/1     Running   0          25m

    # Once the rollout continues, old pods are replaced one at a time
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS        RESTARTS   AGE
    deployment-demo-6465d4c5c9-jsnsl   1/1     Running       0          8m
    deployment-demo-6465d4c5c9-sr8p7   0/1     Pending       0          2s
    deployment-demo-6995c75668-crh9g   1/1     Running       0          30m
    deployment-demo-6995c75668-fk2fj   1/1     Running       0          30m
    deployment-demo-6995c75668-glzph   1/1     Running       0          30m
    deployment-demo-6995c75668-ln92q   1/1     Terminating   0          30m
    deployment-demo-6995c75668-lq76f   1/1     Running       0          30m
    deployment-demo-6995c75668-m4nmn   1/1     Running       0          30m
    deployment-demo-6995c75668-q5969   1/1     Running       0          30m
    deployment-demo-6995c75668-rfpl5   1/1     Running       0          30m
    deployment-demo-6995c75668-th6jv   1/1     Running       0          30m
    deployment-demo-6995c75668-z2tl5   1/1     Running       0          30m
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS              RESTARTS   AGE
    deployment-demo-6465d4c5c9-jsnsl   1/1     Running             0          8m4s
    deployment-demo-6465d4c5c9-sr8p7   0/1     ContainerCreating   0          6s
    deployment-demo-6995c75668-crh9g   1/1     Running             0          30m
    deployment-demo-6995c75668-fk2fj   1/1     Running             0          30m
    deployment-demo-6995c75668-glzph   1/1     Running             0          30m
    deployment-demo-6995c75668-ln92q   1/1     Terminating         0          30m
    deployment-demo-6995c75668-lq76f   1/1     Running             0          30m
    deployment-demo-6995c75668-m4nmn   1/1     Running             0          30m
    deployment-demo-6995c75668-q5969   1/1     Running             0          30m
    deployment-demo-6995c75668-rfpl5   1/1     Running             0          30m
    deployment-demo-6995c75668-th6jv   1/1     Running             0          30m
    deployment-demo-6995c75668-z2tl5   1/1     Running             0          30m

    # After the update to v2 has completed, roll back to v1
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS              RESTARTS   AGE
    deployment-demo-6465d4c5c9-bxmwn   1/1     Running             0          8m52s
    deployment-demo-6465d4c5c9-dt6xg   1/1     Running             0          8m17s
    deployment-demo-6465d4c5c9-hx252   1/1     Running             0          10m
    deployment-demo-6465d4c5c9-jsnsl   1/1     Running             0          18m
    deployment-demo-6465d4c5c9-l4kwg   1/1     Running             0          9m42s
    deployment-demo-6465d4c5c9-mhm6s   1/1     Running             0          10m
    deployment-demo-6465d4c5c9-nzwgb   1/1     Running             0          9m8s
    deployment-demo-6465d4c5c9-rn8w2   1/1     Running             0          8m35s
    deployment-demo-6465d4c5c9-sr8p7   1/1     Running             0          10m
    deployment-demo-6465d4c5c9-tgtqh   1/1     Running             0          9m25s
    deployment-demo-6995c75668-zhb75   0/1     ContainerCreating   0          9s    # the first pod rolled back to v1 has been created
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6995c75668-25glq   1/1     Running   0          2m22s
    deployment-demo-6995c75668-2tfx5   1/1     Running   0          105s
    deployment-demo-6995c75668-62m2m   1/1     Running   0          73s
    deployment-demo-6995c75668-bmz4m   1/1     Running   0          2m4s
    deployment-demo-6995c75668-mcnq5   1/1     Running   0          41s
    deployment-demo-6995c75668-tjm65   1/1     Running   0          57s
    deployment-demo-6995c75668-v9h8m   1/1     Running   0          90s
    deployment-demo-6995c75668-vggmm   1/1     Running   0          2m39s
    deployment-demo-6995c75668-x4h8p   1/1     Running   0          23s
    deployment-demo-6995c75668-zhb75   1/1     Running   0          2m50s
    [root@k8s-master ~]# kubectl get rs
    NAME                         DESIRED   CURRENT   READY   AGE
    deployment-demo-6465d4c5c9   0         0         0       23m   # per the experiment above, the rs with 0 pods is v2
    deployment-demo-6995c75668   10        10        10      46m   # per the experiment above, the rs with 10 pods is v1
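The replacement pattern seen in the listings above (one new pod becomes ready, then one old pod is terminated) can be sketched as a small shell model. This is a simplified illustration, not how the Deployment controller is implemented: the function name `simulate_rollout` is hypothetical, and the model assumes `maxUnavailable` is 0 and that every new pod becomes ready before any old pod is removed.

```shell
#!/usr/bin/env bash
# Simplified model of a rolling update with maxUnavailable=0.
# Usage: simulate_rollout DESIRED MAX_SURGE
# Prints one line per step with the number of old and new pods.
simulate_rollout() {
  local desired=$1 surge=$2
  local old=$desired new=0 batch
  while [ "$old" -gt 0 ]; do
    # Never start more new pods than are still needed.
    batch=$surge
    if [ "$batch" -gt "$old" ]; then batch=$old; fi
    new=$((new + batch))   # new pods are created and become ready first
    old=$((old - batch))   # only then is the same number of old pods removed
    echo "old=$old new=$new"
  done
}

# 10 replicas, maxSurge=1: ten steps, one pod swapped per step.
simulate_rollout 10 1
```

With `maxSurge` set to 1 the total never exceeds 11 pods, which matches the listing that shows ten v2 pods plus a single v1 pod in `ContainerCreating`.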

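In production, a rolling upgrade should not step by more than 25%: the number of pods created at the same time should stay within 25% of the current replica count, so that the service does not become unstable. Setting the number of unavailable pods to 0 effectively avoids deleting many pods at once, which would cut a large batch of live user connections and give users a poor experience.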
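To see what 25% means in pod counts, the percentages can be converted by hand. Kubernetes rounds a percentage `maxSurge` up and a percentage `maxUnavailable` down; the helper below is only a sketch of that arithmetic, and the name `percent_to_count` is hypothetical.

```shell
#!/usr/bin/env bash
# Convert a percentage of the replica count into an absolute pod count.
# Usage: percent_to_count REPLICAS PERCENT MODE   (MODE: up | down)
percent_to_count() {
  local replicas=$1 percent=$2 mode=$3
  if [ "$mode" = "up" ]; then
    # Integer ceiling of replicas * percent / 100 (used for maxSurge)
    echo $(( (replicas * percent + 99) / 100 ))
  else
    # Integer floor of replicas * percent / 100 (used for maxUnavailable)
    echo $(( replicas * percent / 100 ))
  fi
}

replicas=10
echo "maxSurge=$(percent_to_count "$replicas" 25 up)"            # prints maxSurge=3
echo "maxUnavailable=$(percent_to_count "$replicas" 25 down)"    # prints maxUnavailable=2
```

For the 10-replica Deployment used here, the default 25% settings therefore allow up to 13 pods in total and at least 8 available pods during an update.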

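3.7 Cleanup policy

After a Deployment is created from a resource manifest, each version update creates a new ReplicaSet (rs) controller. The old ReplicaSets are kept in the etcd database so that a rollback can reuse them.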

    # Deployment manifest: image v1.0, 2 replicas
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v1.0
            name: deployment-demo-container
    # Create the Deployment from the manifest
    deployment.apps/deployment-demo created
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6995c75668-58mrm   1/1     Running   0          13s
    deployment-demo-6995c75668-pqzzb   1/1     Running   0          12s
    [root@k8s-master ~]# kubectl get rs
    NAME                         DESIRED   CURRENT   READY   AGE
    deployment-demo-6995c75668   2         2         2       65s

    # Change the image in the manifest to v2.0
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v2.0
            name: deployment-demo-container
    # Apply the changed manifest
    deployment.apps/deployment-demo configured
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6465d4c5c9-2lnlc   1/1     Running   0          22s
    deployment-demo-6465d4c5c9-688kr   1/1     Running   0          15s
    [root@k8s-master ~]# kubectl get rs
    NAME                         DESIRED   CURRENT   READY   AGE
    deployment-demo-6465d4c5c9   2         2         2       108s
    deployment-demo-6995c75668   0         0         0       5m49s
    [root@k8s-master ~]# kubectl get svc
    NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    deployment-demo   ClusterIP   10.2.163.19   <none>        80/TCP    3d12h
    kubernetes        ClusterIP   10.0.0.1      <none>        443/TCP   6d16h
    # Access the Service: the response comes from v2.0
    www.xinxianghf.com | hello MyAPP | version v2.0
    # Delete the Deployment; its resources are gone afterwards
    deployment.apps "deployment-demo" deleted
    No resources found in default namespace.
    No resources found in default namespace.

    # Deployment manifest with revisionHistoryLimit: 0, image v2.0
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: deployment-demo
      name: deployment-demo
    spec:
      revisionHistoryLimit: 0
      replicas: 2
      selector:
        matchLabels:
          app: deployment-demo
      template:
        metadata:
          labels:
            app: deployment-demo
        spec:
          containers:
          - image: wangyanglinux/myapp:v2.0
            name: deployment-demo-container
    # Create the Deployment from the manifest
    deployment.apps/deployment-demo created
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-6465d4c5c9-8zghd   1/1     Running   0          25s
    deployment-demo-6465d4c5c9-qw7x6   1/1     Running   0          25s
    # Change the image to v3.0 and apply again
    deployment.apps/deployment-demo configured
    [root@k8s-master ~]# kubectl get pod
    NAME                               READY   STATUS    RESTARTS   AGE
    deployment-demo-7dbccb74d6-trbws   1/1     Running   0          62s
    deployment-demo-7dbccb74d6-xshg9   1/1     Running   0          54s
    # Access the Service: the response comes from v3.0
    www.xinxianghf.com | hello MyAPP | version v3.0
    # With revisionHistoryLimit: 0, only the current ReplicaSet remains
    [root@k8s-master ~]# kubectl get rs
    NAME                         DESIRED   CURRENT   READY   AGE
    deployment-demo-7dbccb74d6   2         2         2       19s
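A quick way to check how many old revisions a Deployment still keeps is to count the ReplicaSets whose DESIRED column is 0. The sketch below runs on sample text copied from the experiment above so it works without a cluster; the function name `count_old_rs` is hypothetical, and it assumes the default column layout of `kubectl get rs`.

```shell
#!/usr/bin/env bash
# Count ReplicaSets that are kept only as history (DESIRED == 0).
# Reads `kubectl get rs` style output on stdin; the first line is the header.
count_old_rs() {
  awk 'NR > 1 && $2 == 0 { n++ } END { print n + 0 }'
}

# Sample output taken from the experiment with the default revisionHistoryLimit.
rs_output='NAME                         DESIRED   CURRENT   READY   AGE
deployment-demo-6465d4c5c9   2         2         2       108s
deployment-demo-6995c75668   0         0         0       5m49s'

printf '%s\n' "$rs_output" | count_old_rs   # prints 1

# On a live cluster the same filter could be fed directly:
#   kubectl get rs | count_old_rs
```

With `revisionHistoryLimit: 0` the count stays at 0 after every update, which also means there is no older revision left to roll back to.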

    [root@k8s-master ~]# kubectl delete deployment --all
    deployment.apps "deployment-demo" deleted
    [root@k8s-master ~]# kubectl get svc
    NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    deployment-demo   ClusterIP   10.2.163.19   <none>        80/TCP    3d12h
    kubernetes        ClusterIP   10.0.0.1      <none>        443/TCP   6d16h
    [root@k8s-master ~]# kubectl delete svc deployment-demo
    service "deployment-demo" deleted

4. DaemonSet Controller

    # DaemonSet manifest
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: daemonset-demo
      labels:
        app: daemonset-demo
    spec:
      selector:
        matchLabels:
          name: daemonset-demo
      template:
        metadata:
          labels:
            name: daemonset-demo
        spec:
          containers:
          - name: daemonset-demo-container
            image: wangyanglinux/myapp:v1.0
    # Create the DaemonSet from the manifest
    daemonset.apps/daemonset-demo created
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    daemonset-demo-pc97q   1/1     Running   0          12s   10.244.58.196   k8s-node02   <none>           <none>
    daemonset-demo-t8blw   1/1     Running   0          12s   10.244.85.221   k8s-node01   <none>           <none>
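A DaemonSet runs one pod on each node that it is allowed to schedule onto, which can be checked by counting pods per node. The sketch below does this on sample text copied from the output above; the function name `pods_per_node` is hypothetical, and it assumes the NODE value is the seventh whitespace-separated column, which only holds while the RESTARTS column is a single token such as `0`.

```shell
#!/usr/bin/env bash
# Count pods per node from `kubectl get pod -o wide` style output on stdin.
# Assumes NODE is column 7 (true when RESTARTS is a single token).
pods_per_node() {
  awk 'NR > 1 { count[$7]++ } END { for (node in count) print node, count[node] }' | sort
}

# Sample output taken from the DaemonSet experiment.
wide_output='NAME                   READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
daemonset-demo-pc97q   1/1     Running   0          12s   10.244.58.196   k8s-node02   <none>           <none>
daemonset-demo-t8blw   1/1     Running   0          12s   10.244.85.221   k8s-node01   <none>           <none>'

printf '%s\n' "$wide_output" | pods_per_node
# prints:
#   k8s-node01 1
#   k8s-node02 1
```

No pod appears on k8s-master in the sample; on a kubeadm cluster this is most likely because the control-plane node carries a NoSchedule taint that the manifest does not tolerate.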

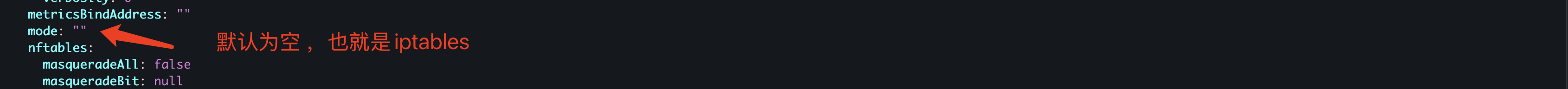

7. Service Controller

Change the kube-proxy working mode

    [root@k8s-master ~]# kubectl edit configmap kube-proxy -n kube-system
    [root@k8s-master ~]# kubectl get pod -l k8s-app=kube-proxy -A   # list the pods labelled kube-proxy
    NAMESPACE     NAME               READY   STATUS    RESTARTS      AGE
    kube-system   kube-proxy-86hs7   1/1     Running   3 (27h ago)   6d22h
    kube-system   kube-proxy-mhzph   1/1     Running   1 (27h ago)   6d22h
    kube-system   kube-proxy-vjfsw   1/1     Running   6 (27h ago)   6d22h
    [root@k8s-master ~]# kubectl delete pod -n kube-system -l k8s-app=kube-proxy
    # The recreated pods reaching Running means the change succeeded
    [root@k8s-master ~]# kubectl get pod -l k8s-app=kube-proxy -A
    NAMESPACE     NAME               READY   STATUS    RESTARTS   AGE
    kube-system   kube-proxy-c6fht   1/1     Running   0          2m49s
    kube-system   kube-proxy-gs7gw   1/1     Running   0          2m48s
    kube-system   kube-proxy-jcs4b   1/1     Running   0          2m49s

    readinessProbe:
      httpGet:
        port: 80
        path: /index1.html
      initialDelaySeconds: 1
      periodSeconds: 3
    -------------
    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-clusterip
      namespace: default
    spec:
      type: ClusterIP
      selector:
        app: myapp
        release: stabel
        svc: clusterip
      ports:
      - name: http
        port: 80
        targetPort: 80

7.1 ClusterIP type

    [root@k8s-master ~]# cat >> myapp-clusterip-deploy.yaml <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-clusterip-deploy
      namespace: default
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: myapp
          release: stabel
          svc: clusterip
      template:
        metadata:
          labels:
            app: myapp
            release: stabel
            env: test
            svc: clusterip
        spec:
          containers:
          - image: wangyanglinux/myapp:v1.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
            readinessProbe:
              httpGet:
                port: 80
                path: /index1.html
              initialDelaySeconds: 1
              periodSeconds: 3
    EOF
    [root@k8s-master ~]# kubectl apply -f myapp-clusterip-deploy.yaml
    [root@k8s-master ~]# kubectl get pod   # the pods are Running but none of them is ready
    NAME                                     READY   STATUS    RESTARTS   AGE
    myapp-clusterip-deploy-5c9cc9b64-2ssbv   0/1     Running   0          41s
    myapp-clusterip-deploy-5c9cc9b64-pf6b7   0/1     Running   0          41s
    myapp-clusterip-deploy-5c9cc9b64-pg4ww   0/1     Running   0          41s

    # Create the Service resource
    [root@k8s-master ~]# cat >> myapp-service.yaml <<EOF
    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-clusterip
      namespace: default
    spec:
      type: ClusterIP
      selector:
        app: myapp
        release: stabel
        svc: clusterip
      ports:
      - name: http
        port: 80
        targetPort: 80
    EOF
    [root@k8s-master ~]# kubectl apply -f myapp-service.yaml
    [root@k8s-master ~]# kubectl get svc
    NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    kubernetes        ClusterIP   10.0.0.1      <none>        443/TCP   6d21h
    myapp-clusterip   ClusterIP   10.0.192.93   <none>        80/TCP    7s
    [root@k8s-master ~]# curl 10.0.192.93   # the Service IP cannot be reached
    curl: (7) Failed to connect to 10.0.192.93 port 80: Connection refused

    # Compare the pod labels with the Service selector: the selector is a subset of the pod labels
    [root@k8s-master ~]# kubectl get pod --show-labels
    NAME                                     READY   STATUS    RESTARTS   AGE     LABELS
    myapp-clusterip-deploy-5c9cc9b64-2ssbv   0/1     Running   0          8m24s   app=myapp,env=test,pod-template-hash=5c9cc9b64,release=stabel,svc=clusterip
    myapp-clusterip-deploy-5c9cc9b64-pf6b7   0/1     Running   0          8m24s   app=myapp,env=test,pod-template-hash=5c9cc9b64,release=stabel,svc=clusterip
    myapp-clusterip-deploy-5c9cc9b64-pg4ww   0/1     Running   0          8m24s   app=myapp,env=test,pod-template-hash=5c9cc9b64,release=stabel,svc=clusterip
    [root@k8s-master ~]# kubectl get svc -o wide
    NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE     SELECTOR
    kubernetes        ClusterIP   10.0.0.1      <none>        443/TCP   6d21h   <none>
    myapp-clusterip   ClusterIP   10.0.192.93   <none>        80/TCP    3m20s   app=myapp,release=stabel,svc=clusterip

    # Create the file that the readiness probe checks
    [root@k8s-master ~]# kubectl exec -it myapp-clusterip-deploy-5c9cc9b64-2ssbv -- /bin/bash   # enter the pod
    myapp-clusterip-deploy-5c9cc9b64-2ssbv:/# cd /usr/local/nginx/html/
    # Write the current time into index1.html
    myapp-clusterip-deploy-5c9cc9b64-2ssbv:/usr/local/nginx/html# date > index1.html
    [root@k8s-master ~]# kubectl get pod   # myapp-clusterip-deploy-5c9cc9b64-2ssbv is now ready
    NAME                                     READY   STATUS    RESTARTS   AGE
    myapp-clusterip-deploy-5c9cc9b64-2ssbv   1/1     Running   0          11m
    myapp-clusterip-deploy-5c9cc9b64-pf6b7   0/1     Running   0          11m
    myapp-clusterip-deploy-5c9cc9b64-pg4ww   0/1     Running   0          11m
    [root@k8s-master ~]# curl 10.0.192.93   # the Service can now be reached
    www.xinxianghf.com | hello MyAPP | version v1.0
    [root@k8s-master ~]# curl 10.0.192.93/hostname.html   # this page prints the pod name; only the ready pod is reached
    myapp-clusterip-deploy-5c9cc9b64-2ssbv

    # Create the readiness file in a second pod
    [root@k8s-master ~]# kubectl exec -it myapp-clusterip-deploy-5c9cc9b64-pf6b7 -- /bin/bash
    myapp-clusterip-deploy-5c9cc9b64-pf6b7:/# date > /usr/local/nginx/html/index1.html
    myapp-clusterip-deploy-5c9cc9b64-pf6b7:/# exit
    [root@k8s-master ~]# kubectl get pod   # two pods are ready now
    NAME                                     READY   STATUS    RESTARTS   AGE
    myapp-clusterip-deploy-5c9cc9b64-2ssbv   1/1     Running   0          19m
    myapp-clusterip-deploy-5c9cc9b64-pf6b7   1/1     Running   0          19m
    myapp-clusterip-deploy-5c9cc9b64-pg4ww   0/1     Running   0          19m
    [root@k8s-master ~]# curl 10.0.192.93/hostname.html
    myapp-clusterip-deploy-5c9cc9b64-pf6b7
    [root@k8s-master ~]# curl 10.0.192.93/hostname.html
    myapp-clusterip-deploy-5c9cc9b64-2ssbv

    # This confirms that a Service load-balances to a pod only when two conditions hold:
    # 1. The Service's selector labels are a subset of (or equal to) the pod's labels.
    # 2. The pod is in the ready state.

    # Inspect the ipvs forwarding rules
    [root@k8s-master ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.0.0.1:443 rr
      -> 192.168.68.160:6443          Masq    1      0          0
    TCP  10.0.0.10:53 rr
      -> 10.244.58.204:53             Masq    1      0          0
      -> 10.244.58.209:53             Masq    1      0          0
    TCP  10.0.0.10:9153 rr
      -> 10.244.58.204:9153           Masq    1      0          0
      -> 10.244.58.209:9153           Masq    1      0          0
    TCP  10.0.192.93:80 rr                                            # the rule for the Service created above; rr is the round-robin algorithm
      -> 10.244.58.197:80             Masq    1      0          0     # backend server
      -> 10.244.85.224:80             Masq    1      0          0     # backend server
    TCP  10.6.2.65:5473 rr
      -> 192.168.68.161:5473          Masq    1      0          0
    UDP  10.0.0.10:53 rr
      -> 10.244.58.204:53             Masq    1      0          0
      -> 10.244.58.209:53             Masq    1      0          0
    [root@k8s-master ~]# kubectl get svc
    NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    kubernetes        ClusterIP   10.0.0.1      <none>        443/TCP   6d22h
    myapp-clusterip   ClusterIP   10.0.192.93   <none>        80/TCP    71m
    [root@k8s-master ~]# kubectl get pod -o wide
    NAME                                     READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    myapp-clusterip-deploy-5c9cc9b64-2ssbv   1/1     Running   0          77m   10.244.58.197   k8s-node02   <none>           <none>
    myapp-clusterip-deploy-5c9cc9b64-pf6b7   1/1     Running   0          77m   10.244.85.224   k8s-node01   <none>           <none>
    myapp-clusterip-deploy-5c9cc9b64-pg4ww   0/1     Running   0          77m   10.244.85.223   k8s-node01   <none>           <none>
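The label condition in this experiment is a subset test: every key=value pair in the Service selector has to appear among the pod's labels, while the pod may carry extra labels. The sketch below illustrates that test on the label strings from the output above; the function name `selector_matches` is hypothetical, and the real matching is done by Kubernetes itself, not by a script like this.

```shell
#!/usr/bin/env bash
# Return 0 when every key=value pair of SELECTOR appears in POD_LABELS.
# Both arguments are comma-separated lists, as printed by kubectl.
# Usage: selector_matches SELECTOR POD_LABELS
selector_matches() {
  local selector=$1 labels=",$2,"
  local pair
  local IFS=','
  for pair in $selector; do
    case "$labels" in
      *",$pair,"*) ;;        # this selector pair is present on the pod
      *) return 1 ;;         # a required label is missing
    esac
  done
  return 0
}

pod_labels='app=myapp,env=test,pod-template-hash=5c9cc9b64,release=stabel,svc=clusterip'

if selector_matches 'app=myapp,release=stabel,svc=clusterip' "$pod_labels"; then
  echo "selected"
else
  echo "not selected"
fi
# prints: selected
```

Matching labels alone is not sufficient: as the curl results show, a pod also has to pass its readiness probe before it receives traffic. On a live cluster, `kubectl get endpoints myapp-clusterip` lists the pod addresses that currently satisfy both conditions.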

Controller summary

    Controllers: apply and replace
    1. The pod is in the ready state.
    2. The Service's selector labels are a subset of the pod's labels (within the same namespace).